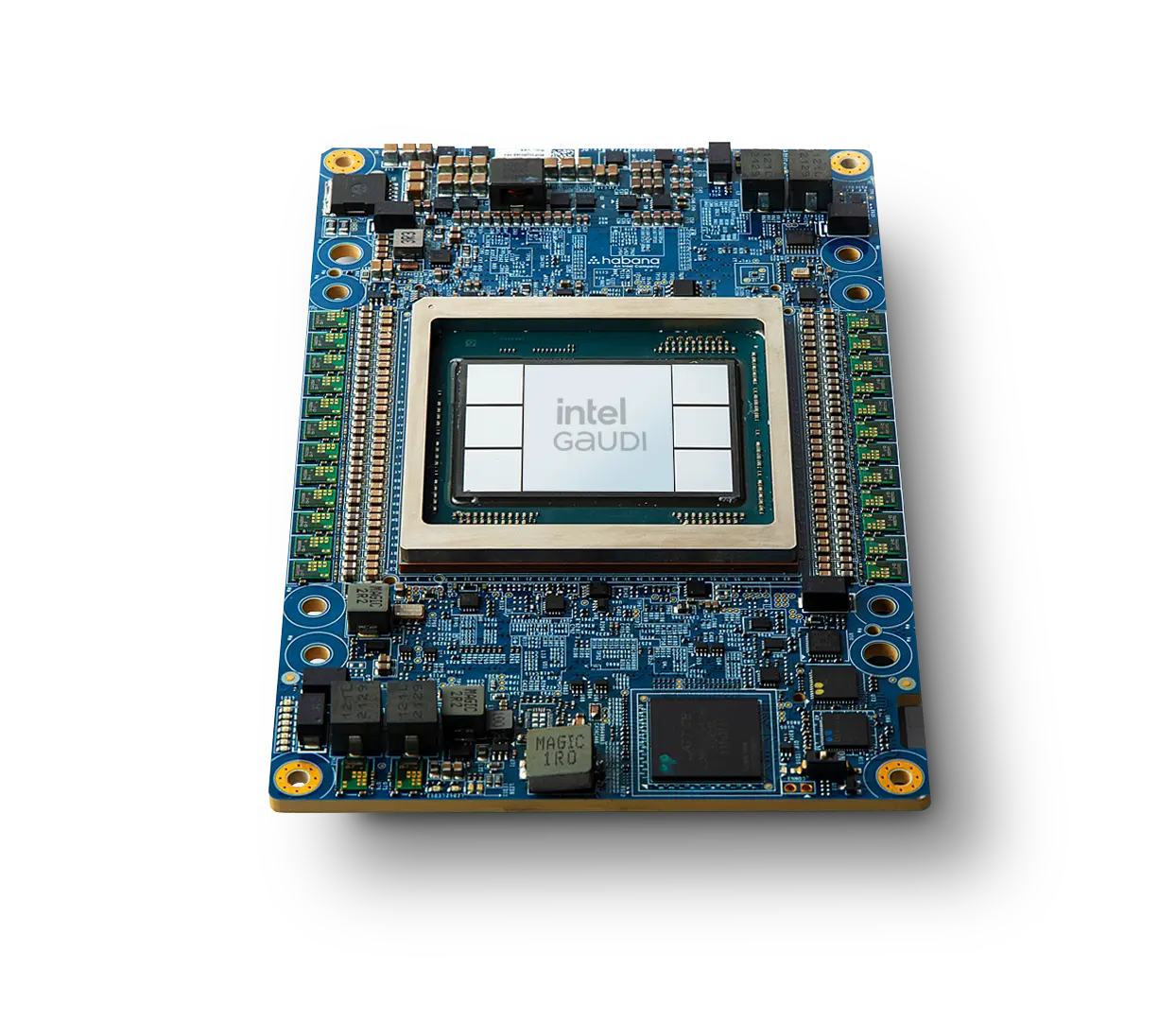

Intel® Gaudi® 2 AI accelerator

High Performance Acceleration for GenAI and LLMs

Our Intel® Gaudi® 2 AI accelerator is driving improved deep learning price-performance

Intel Gaudi 2 AI architectural features:

- 7nm process technology

- Heterogeneous compute

- 24 Tensor Processor Cores

- Dual matrix multiplication engines

- 24x 100 Gigabit Ethernet ports integrated on chip

- 96 GB HBM2E memory on board

- 48 MB SRAM

- Integrated Media Control

Intel Gaudi 2 AI accelerator in the Cloud

Discover the performance and ease of use that the Intel Gaudi 2 AI accelerator provides on the Intel Developer Cloud.

And coming soon: Intel Gaudi 2 accelerators on the Stability.AI Cloud.

Intel Gaudi 2 AI accelerator in the Data Center

Bring the price-performance advantage into your infrastructure with solutions from Supermicro and IEI.

Intel Gaudi 2 AI accelerator training and inference performance delivers choice.

Based on MLPerf Training 3.0 results published in June 2023, the Intel Gaudi 2 AI accelerator is the ONLY viable alternative to H100 for training large language models like GPT-3. Read the details >

In addition to the MLPerf industry benchmark, Intel Gaudi 2 AI accelerator scores on other third-party evaluations.

Visit https://habana.ai/habana-claims-validation for workloads and configurations. Results may vary.

https://huggingface.co/blog/habana-gaudi-2-benchmark

https://huggingface.co/blog/habana-gaudi-2-bloom

Intel Gaudi 2 AI accelerator supports massive, flexible scale out.

With 24x 100 Gigabit Ethernet (RoCEv2) ports integrated onto every Intel Gaudi 2 AI accelerator, customers benefit from flexible and cost-efficient scalability that extends the performance of the Intel Gaudi 2 AI accelerator from one to thousands of accelerators.
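To put the port count in perspective, the short Python sketch below works out the raw aggregate line rate implied by 24 ports at 100 Gb/s each. The function and constant names are illustrative only, and the result is a nominal per-direction line rate rather than a measured throughput; it assumes nothing about how the ports are divided between in-server and scale-out links.

```python
# Nominal figures taken from the text: 24 ports, 100 Gigabit Ethernet each.
PORTS_PER_ACCELERATOR = 24
PORT_SPEED_GBPS = 100  # gigabits per second, per port, per direction


def aggregate_bandwidth_gbps(ports=PORTS_PER_ACCELERATOR,
                             port_speed_gbps=PORT_SPEED_GBPS):
    """Raw aggregate line rate in gigabits per second (illustrative helper)."""
    return ports * port_speed_gbps


def gbps_to_gigabytes_per_second(gbps):
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


total_gbps = aggregate_bandwidth_gbps()
print(f"Aggregate line rate: {total_gbps} Gb/s "
      f"({total_gbps / 1000} Tb/s, "
      f"{gbps_to_gigabytes_per_second(total_gbps)} GB/s)")
```

Real-world throughput depends on topology, protocol overhead, and workload, so treat this figure as an upper bound on the networking capacity of a single accelerator.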

Figure 1: Server reference design featuring 8x Intel Gaudi 2 accelerators

For more information on building out system scale with Intel Gaudi 2 AI accelerator, see our Networking with Gaudi page >

Easily build new or migrate existing models on Intel Gaudi 2 AI accelerators

Intel Gaudi software, optimized for performance and ease of use on the Intel Gaudi platform, gives developers the documentation, tools, how-to content, and reference models they need to get started quickly.

Access and easily implement over 50,000 models with the Habana Optimum Library on the Hugging Face Hub.

For more information, see our developer site >