Intel® Gaudi® 3

AI accelerator

Big for Gen AI,

Even Bigger for ROI

For GenAI, the Genuine Alternative.

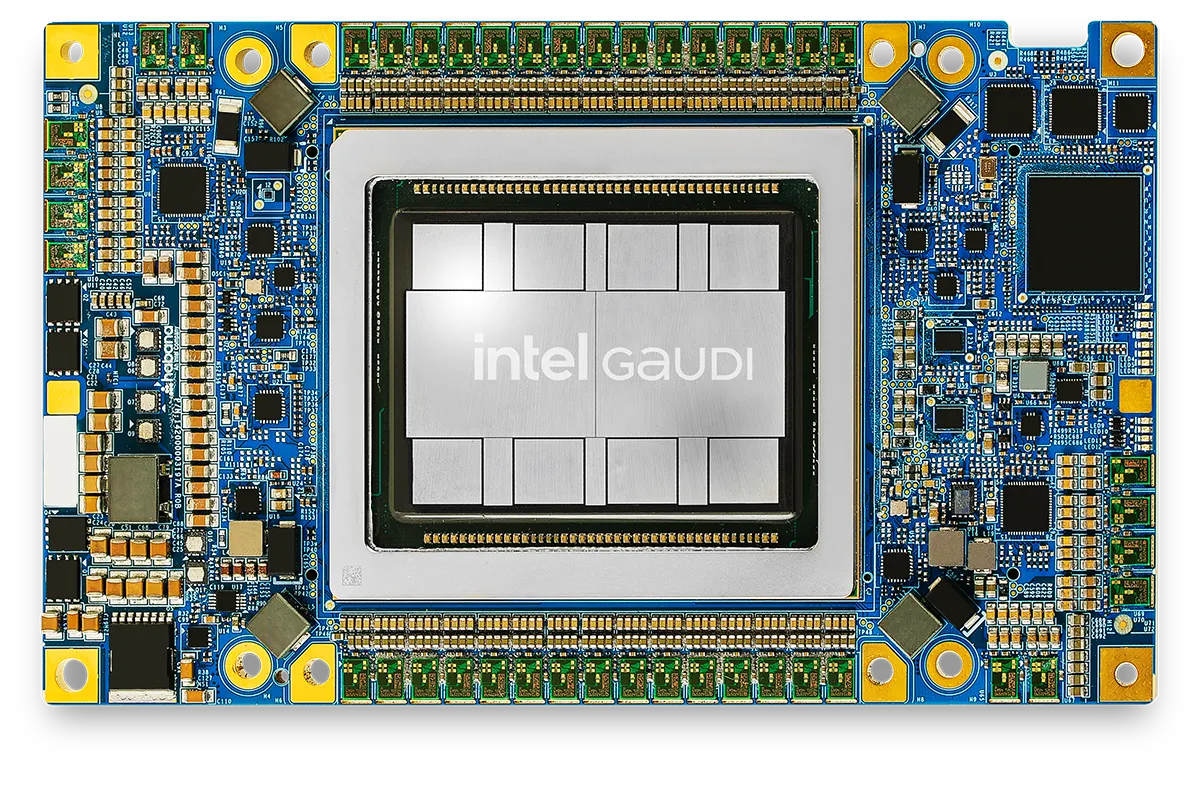

Introducing the Intel® Gaudi® 3 AI accelerator.

With performance, scalability and efficiency that give more choice to more customers, Intel® Gaudi® 3 accelerators help enterprises unlock insights, innovations and income.

With the growing demand for generative AI compute has come increasing demand for alternatives that give customers choice [1][2][3][4]. The Intel® Gaudi® 3 AI accelerator is designed to deliver choice with:

Price-Performance Efficiency

Great price-performance so enterprise AI teams can train more, deploy more and spend less.

Massive Scalability

With 24 x 200 GbE ports of industry-standard Ethernet built into every Gaudi 3 accelerator, customers can flexibly scale clusters to fit any AI workload, from a single rack to multi-thousand-node clusters.

Easy-to-use Development Platform

Gaudi software is designed to enable developers to deploy today’s popular models with open software that integrates the PyTorch framework and supports thousands of Hugging Face transformer and diffusion models.

Supported by Intel AI Cloud

Developers can play on the Intel Gaudi AI cloud to learn how easy it is to develop and deploy generative AI models on the Gaudi platform.

Gen over Gen advancements

– 2x AI compute (FP8)

– 4x AI compute (BF16)

– 2x Network Bandwidth

– 1.5x Memory Bandwidth

*Specification advances of Intel® Gaudi® 3 accelerator vs. Intel® Gaudi® 2 accelerator

Intel® Gaudi® 3 accelerator performance vs. Nvidia GPUs

– 1.5x faster time-to-train than Nvidia H100 on average [1]

– 1.3x faster inference than Nvidia H200 on average [2]

– 1.5x faster inference than Nvidia H100 on average [3]

– 1.4x higher inference power efficiency than Nvidia H100 on average [4]

Efficiency, performance and scale for data center AI workloads

Intel® Gaudi® 3 AI accelerators support state-of-the-art generative AI and LLMs for the data center and pair with Intel® Xeon® Scalable processors, the host CPU of choice for leading AI systems, to deliver enterprise performance and reliability.

3D Generation

Video Generation

Image Generation

Text Generation

Sentiment

Summarization

Q&A

Classification

Translation

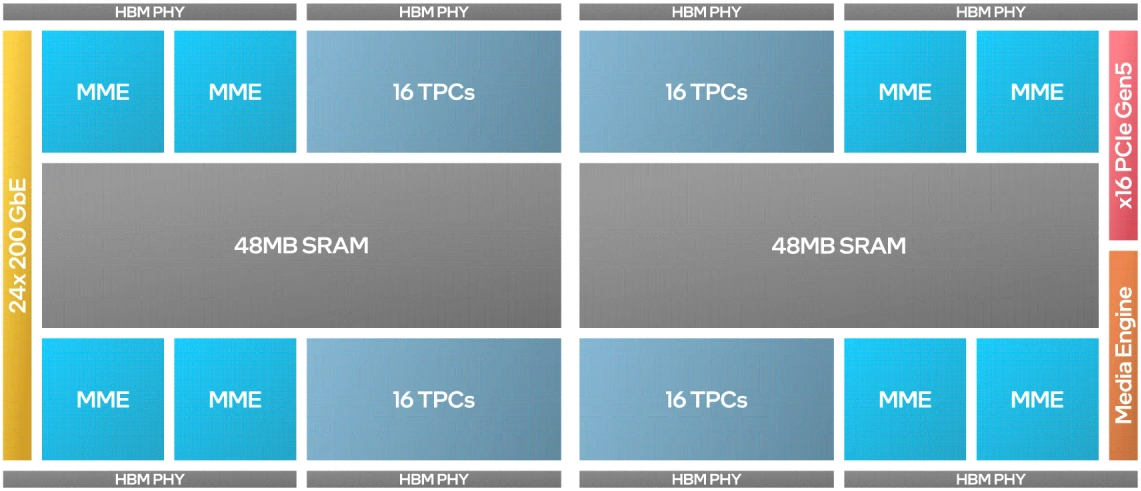

Architected at Inception for Gen AI Training and Inference

for every 2 MME and 16 TPC unit

The Intel® Gaudi® 3 accelerator challenges the industry’s legacy performance leader with speed and efficiency born from its AI compute design.

The Intel® Gaudi® 3 accelerator architecture features heterogeneous compute engines: eight matrix multiplication engines (MMEs) and 64 fully programmable Tensor Processor Cores (TPCs). It supports the popular data types required for deep learning: FP32, TF32, BF16, FP16 and FP8. For details on how the Intel® Gaudi® 3 accelerator architecture delivers AI compute performance more efficiently, see the whitepaper.

Enhanced Networking and Scaling

– Open industry standard Ethernet networking

– Enjoy choice, avoid lock-in to proprietary networking fabrics

– 33%* more I/O peak throughput on each Intel® Gaudi® 3 accelerator for robust scale-up

*Nvidia H100 GPU (900 GB/s closed NVLink connectivity) vs. Intel® Gaudi® 3 accelerator (1200 GB/s open standard RoCE).
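The arithmetic behind the footnote can be checked in a few lines. This is a minimal sketch, not an official calculation: it uses only the port count, port speed and GB/s figures quoted on this page, and it assumes the 1200 GB/s figure counts both directions of all 24 ports.

```python
# Figures quoted on this page.
PORTS_PER_ACCELERATOR = 24
PORT_SPEED_GBIT_S = 200      # each port is 200 GbE
H100_NVLINK_GB_S = 900       # GB/s, from the footnote
GAUDI3_ROCE_GB_S = 1200      # GB/s, from the footnote

# Assumption: the 1200 GB/s figure is the bidirectional total over all ports.
per_direction_gb_s = PORTS_PER_ACCELERATOR * PORT_SPEED_GBIT_S / 8  # bits -> bytes
bidirectional_gb_s = 2 * per_direction_gb_s

# Relative advantage of the quoted Gaudi 3 figure over the quoted H100 figure.
advantage_pct = (GAUDI3_ROCE_GB_S / H100_NVLINK_GB_S - 1) * 100

print(f"{per_direction_gb_s:.0f} GB/s per direction")
print(f"{bidirectional_gb_s:.0f} GB/s bidirectional")
print(f"{advantage_pct:.0f}% more peak I/O throughput")
```

Under that assumption, 24 ports at 200 Gb/s add up to the quoted 1200 GB/s, and 1200 GB/s against 900 GB/s gives the 33% figure.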

Scale large systems,

scale great performance.

Great networking performance starts at the processor: the Intel® Gaudi® 3 accelerator integrates 24 x 200 Gigabit Ethernet ports on chip, enabling more efficient scale-up within the server and massive scale-out capacity for cluster-scale systems that support fast training and inference of models large and small. Near-linear scalability of Intel® Gaudi® networking preserves the cost-performance advantage, whether you’re scaling out to four nodes or four hundred. For more information about scaling Intel® Gaudi® accelerators, see the whitepaper.

Putting performance into practice has never been this easy.

Intel® Gaudi® software eases development by integrating the PyTorch framework, the foundation of the majority of Gen AI and LLM development. Migrating PyTorch code from Nvidia GPUs to Intel® Gaudi® accelerators requires changing only 3 to 5 lines of code. Through the Optimum Habana library, Intel® Gaudi® software also gives developers easy access to thousands of popular transformer and diffusion models on the Hugging Face hub. For more information on developing with Intel® Gaudi® software, read on.
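As an illustration of what those few changed lines can look like, here is a minimal sketch rather than an official migration recipe. It assumes the Intel Gaudi PyTorch bridge is installed as the `habana_frameworks` package and that the script runs in lazy mode, where `htcore.mark_step()` triggers execution. The helper names `gaudi_available`, `train_one_step` and `DEVICE` are hypothetical, and the tiny linear model is only a stand-in for a real workload.

```python
import importlib.util


def gaudi_available() -> bool:
    """Return True when the Intel Gaudi PyTorch bridge package can be found."""
    return importlib.util.find_spec("habana_frameworks") is not None


def train_one_step(device_name: str) -> float:
    """Run one SGD step of a tiny linear model on the given device."""
    import torch  # imported lazily so gaudi_available() works without PyTorch

    htcore = None
    if device_name == "hpu":
        # Change 1: load the Gaudi bridge, which registers the "hpu" device.
        import habana_frameworks.torch.core as htcore

    # Change 2: target "hpu" where the GPU version would use "cuda".
    device = torch.device(device_name)
    model = torch.nn.Linear(4, 1).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    x = torch.randn(8, 4, device=device)
    y = torch.randn(8, 1, device=device)

    loss = torch.nn.functional.mse_loss(model(x), y)
    optimizer.zero_grad()
    loss.backward()
    if htcore is not None:
        htcore.mark_step()  # Change 3: execute the accumulated lazy-mode graph
    optimizer.step()
    if htcore is not None:
        htcore.mark_step()  # Change 4: execute again after the weight update
    return loss.item()


# Fall back to the CPU so the same script also runs on a machine without Gaudi.
DEVICE = "hpu" if gaudi_available() else "cpu"
```

Calling `train_one_step(DEVICE)` requires PyTorch. On a Gaudi machine it takes the `hpu` branch, and elsewhere the same code runs unchanged on the CPU.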

You’ve got choice with Intel® Gaudi® 3 accelerators

Every enterprise has different AI compute requirements with many considerations—desired system performance, scale, power, footprint and more.

To address your enterprise’s specific needs, the Intel® Gaudi® 3 accelerator is available in these hardware options:

Intel® Gaudi® 3 mezzanine card: HL-325L (air cooled)

Intel® Gaudi® 3 mezzanine card: HL-335 (liquid cooled)
