Intel® Gaudi® software

Designed to meet Gen AI developers where you are

Intel® Gaudi® software suite

The goal of Intel Gaudi software is to ease migration of existing software to Intel Gaudi AI accelerators, preserving software investments and making it easy to build new models, for both training and deployment of the numerous and growing models defining deep learning, generative AI and large language models.

Ready to use Intel Gaudi software? Simplified development the way you want to develop.

Intel Gaudi software suite: optimized for deep learning training and inference, integrating frameworks, tools, drivers and libraries

We offer extensive support for data scientists, developers, and IT and systems administrators with:

Developer Site: featuring documentation, customer-available software, “how to” content and a community forum

GitHub: providing reference models, set-up and install instructions, snapshot scripts for analysis and debug, custom kernel examples, roadmap, and an “issues” sub-site tracking to-dos, bugs, feature requests and more

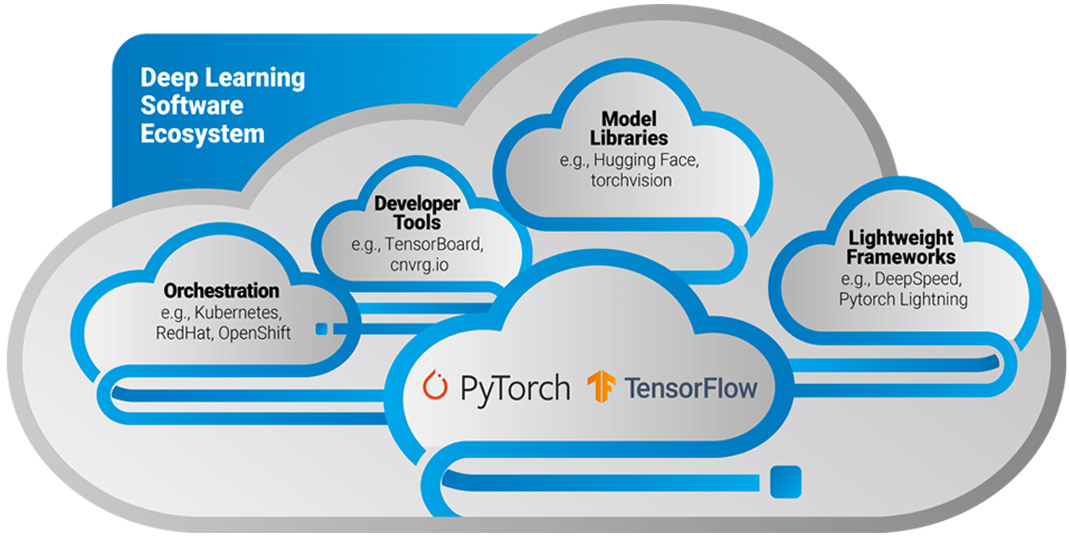

Intel Gaudi AI accelerator

Software ecosystem for deep learning

Hugging Face: over 50,000 AI models and 90,000+ GitHub stars. The Optimum Habana library on Hugging Face gives customers using Intel Gaudi AI accelerators access to the entire Hugging Face model universe. Check out a list of Hugging Face Habana-optimized models here.

Lightning: acceleration of PyTorch deep learning workloads

DeepSpeed: easy-to-use deep learning optimization software that enables scale and speed, with particular focus on large-scale models

Cnvrg.io: MLOps support for customers using Intel Gaudi processors

Solutions focused on business and end-user outcomes

revenue and deliver rich consumer experiences.

For more information on how Intel Gaudi AI accelerators are enabling high-value applications using computer vision, NLP, generative AI, large language and multimodal models, see our industry solutions.