Purpose-Built AI Processors for the Cloud

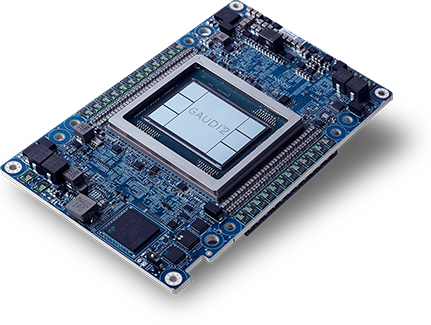

Intel Gaudi processors are designed to deliver cost-efficient and easy-to-implement AI workloads in the cloud

Efficiency

Generative AI and large language models demand massive, efficient AI compute. Intel® Gaudi® processors offer cloud service providers proven price/performance advantages, enabling them to deliver cost-efficient training and inference at any scale. SynapseAI® software and Intel Gaudi accelerator hardware were architected for training and inference performance and efficiency, so end-users can train on larger data sets and retrain models more frequently, increasing the accuracy and applicability of their deep learning workloads.

Usability

The Intel team is committed to making it easy for cloud service providers and their end-customers to develop and deploy models on Intel Gaudi accelerators. Our SynapseAI® software platform integrates the PyTorch and TensorFlow frameworks and supports a growing array of generative AI, large language, computer vision and natural language models. We provide data scientists and developers with documentation, “how to” guides and videos, community and Intel Gaudi accelerator support forums, and tools on the Habana Developer Site and on the Habana GitHub.

Scalability

Every Intel Gaudi 2 processor integrates twenty-four 100-Gigabit ports of RDMA over Converged Ethernet (RoCE), giving cloud providers flexible, expandable networking capacity and all-to-all processor connectivity within the server node. Integrated RoCE on every Intel Gaudi 2 accelerator also gives CSPs and their customers a wide range of cost-effective options for scaling out across nodes and racks, with versatile AI compute capacity based on affordable, industry-standard Ethernet technology.

We are proud to partner with AWS to deliver the industry’s most cost-efficient AI training instances with the new Amazon EC2 DL1 instances.

Scaling cloud capacity on Intel Gaudi accelerators with integrated RoCE

- All-to-all connectivity optimizes network capacity within the node

- Industry-standard RoCE offers efficient scale-out across nodes and racks

Scale-out node: 6 x 400 GbE

- 7 100-GbE ports for all-to-all connectivity

- 3 100-GbE ports for scale-out

42 training nodes in Voyager: seven 6-node racks

- 252 x 400 GbE QSFP-DD

- Connect to centralized networking switch
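The port counts above fit together arithmetically, which the following minimal Python sketch illustrates. It assumes eight accelerators per node (not stated here, but inferred from the seven all-to-all ports, one link to each peer) and that the 7 and 3 port counts are per accelerator. The class and function names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeTopology:
    """Port budget for one server node (illustrative only)."""
    accelerators_per_node: int  # assumption: 8, inferred from 7 all-to-all ports
    all_to_all_ports: int       # 100-GbE ports per accelerator, used inside the node
    scale_out_ports: int        # 100-GbE ports per accelerator, used across nodes
    port_gbps: int = 100        # speed of each integrated RoCE port
    uplink_gbps: int = 400      # speed of each aggregated scale-out link

    def is_all_to_all(self) -> bool:
        # Full connectivity needs one port to each of the other accelerators.
        return self.all_to_all_ports == self.accelerators_per_node - 1

    def scale_out_uplinks(self) -> int:
        # Aggregate every scale-out port in the node into 400-GbE links.
        total_gbps = self.accelerators_per_node * self.scale_out_ports * self.port_gbps
        return total_gbps // self.uplink_gbps


def cluster_uplinks(node: NodeTopology, racks: int, nodes_per_rack: int) -> int:
    """Total 400-GbE links that reach the centralized switch."""
    return racks * nodes_per_rack * node.scale_out_uplinks()


node = NodeTopology(accelerators_per_node=8, all_to_all_ports=7, scale_out_ports=3)
print(node.is_all_to_all())                               # True
print(node.scale_out_uplinks())                           # 6
print(cluster_uplinks(node, racks=7, nodes_per_rack=6))   # 252
```

Under these assumptions, 8 x 3 x 100 GbE gives 2,400 Gb/s per node, or six 400-GbE links, and 42 nodes give the 252 links listed above.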