Intel® Gaudi® AI accelerator

First Generation

Deep Learning Training & Inference Processor

For leading price-performance in the cloud and on premises

Intel Gaudi AI accelerator in the Cloud

Get started with Amazon EC2 DL1 instances featuring Intel Gaudi AI accelerators

Intel Gaudi AI accelerator in the Data Center

Build Intel Gaudi AI accelerators into your data center with Supermicro

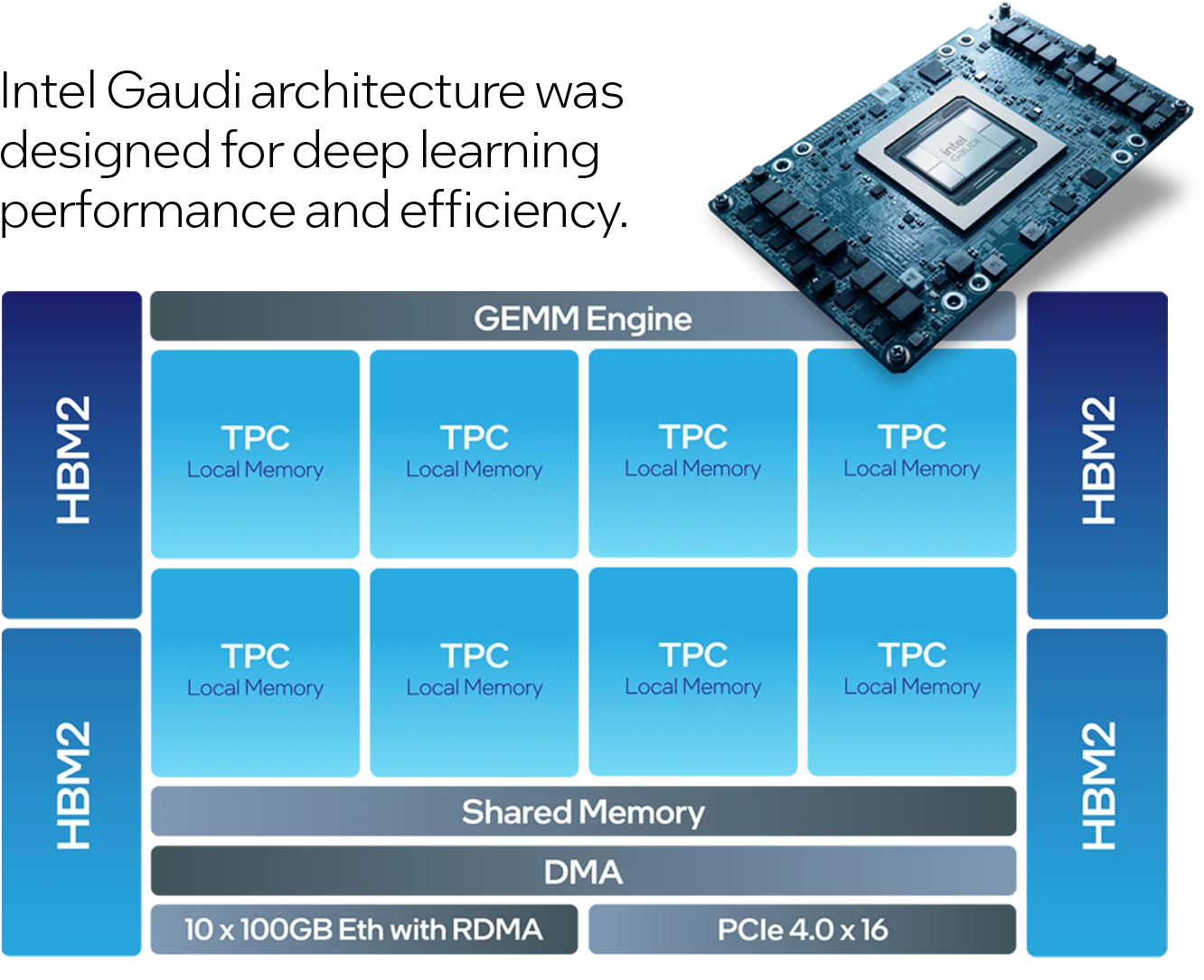

What makes the Intel Gaudi AI accelerator so efficient?

Process technology

Matrix multiplication engine

Tensor processor cores

Onboard HBM2

100G Ethernet ports

Massive and flexible system scaling with Intel Gaudi AI accelerators

Every first-generation Intel Gaudi AI processor integrates ten on-chip 100 Gigabit Ethernet ports that support RDMA over Converged Ethernet (RoCE v2). This delivers unmatched scalability: customers can efficiently scale AI training from a single processor to thousands to meet the expansive compute requirements of today's deep learning workloads.
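To put the port count in perspective, here is a back-of-the-envelope sketch of nominal line-rate arithmetic. It assumes 100 Gb/s per port in each direction, ignores protocol overhead, and assumes eight processors per server (as in the eight-processor Supermicro server described below). The helper names are hypothetical and do not come from any Intel Gaudi software.

```python
# Nominal line-rate arithmetic only. Assumptions: 100 Gb/s per port per
# direction and no RoCE/Ethernet protocol overhead. Helper names are hypothetical.
PORTS_PER_PROCESSOR = 10     # ten on-chip 100 GbE RoCE v2 ports
GBPS_PER_PORT = 100          # nominal line rate per port, per direction
PROCESSORS_PER_SERVER = 8    # assumption: eight processors per server


def aggregate_gbps(ports: int = PORTS_PER_PROCESSOR,
                   gbps_per_port: int = GBPS_PER_PORT) -> int:
    """Total nominal Ethernet line rate of one processor, in Gb/s per direction."""
    return ports * gbps_per_port


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def servers_needed(processors: int,
                   per_server: int = PROCESSORS_PER_SERVER) -> int:
    """Servers required to host the given number of processors (integer ceiling division)."""
    return (processors + per_server - 1) // per_server


if __name__ == "__main__":
    per_chip = aggregate_gbps()
    print(f"Per processor: {per_chip} Gb/s "
          f"({gbps_to_gbytes_per_s(per_chip):.0f} GB/s) per direction")
    print(f"Servers for 1,000 processors: {servers_needed(1000)}")
```

Running it prints `Per processor: 1000 Gb/s (125 GB/s) per direction` and `Servers for 1,000 processors: 125`. These are raw line-rate figures. Achievable throughput depends on the network topology, how ports are split between intra-server and scale-out links, and protocol overhead.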

Options for building Intel Gaudi AI accelerator systems on premises

For customers who want to build out on-premises systems, we recommend the Supermicro X12 Server, which features eight Intel Gaudi AI processors. For customers who want to configure their own servers based on Intel Gaudi AI accelerators, we provide two reference designs: the HLS-1 and the HLS-1H.

For more information, see the details on these server options.

Making development on Intel Gaudi AI accelerators fast and easy:

Intel Gaudi Software

Intel Gaudi software is optimized for deep learning model development and for easing the migration of existing GPU-based models to Intel Gaudi platform hardware. It integrates the PyTorch and TensorFlow frameworks and supports a rapidly growing array of computer vision, natural language processing, and multimodal models. Over 200K models on Hugging Face are easily enabled on Intel Gaudi accelerators with the Optimum Habana software library. Getting started with model migration can be as simple as adding two lines of code, and for expert users who want to program their own kernels, the Intel Gaudi platform also offers the full toolkit and libraries to do so. Intel Gaudi software supports training and inference on both first-generation Intel Gaudi accelerators and Intel Gaudi 2 accelerators.

For more information on how the Intel Gaudi platform makes it easy to migrate existing models or build new ones on Gaudi, see our SynapseAI product page.