Purpose-Built AI Processors in the Data Center

Efficiency

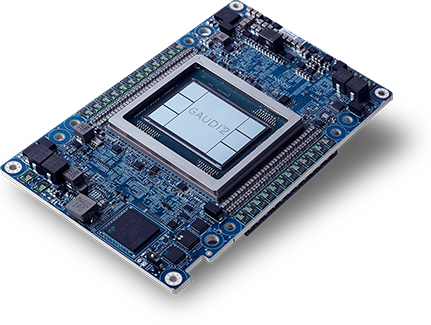

Deep learning training and inference on first- and second-generation Intel Gaudi AI accelerators benefit from a substantial price/performance advantage, so you get more training and inference compute while spending less on your on-premises deployments. Architected from the ground up and optimized for deep learning performance and efficiency, Intel Gaudi platform solutions enable more customers to run more deep learning workloads with speed and performance, while containing operational costs.

Usability

We’re focused on giving data scientists, developers, and IT and systems administrators all they need to ease the on-premises development and deployment of solutions based on Intel Gaudi AI accelerators. Our SynapseAI® Software Platform is optimized for building and implementing deep learning models on Intel Gaudi accelerators with the PyTorch and TensorFlow frameworks, across a wide array of models including generative AI, large language, computer vision and natural language models. The Habana Developer and Habana GitHub sites provide a wide array of content (documentation, scripts, how-to videos, reference models and tools) to help you get started, so you can easily build new models or migrate existing ones to systems built on Intel Gaudi accelerators.

Scalability

With the advent of generative AI and large language models has come a leap in the size and complexity of workloads. It is now more essential than ever for data centers to be able to scale capacity up and out easily and cost-effectively, with some systems requiring hundreds or even thousands of processors to run training and deployments with speed and accuracy. First- and second-generation Intel Gaudi processors were designed like no other, expressly to address scalability, with twenty-four 100-Gigabit Ethernet ports of RDMA over Converged Ethernet (RoCE) integrated into every Intel Gaudi 2 processor. The result is massive and flexible scaling capacity based on the industry’s networking standard already employed in virtually all data centers.

Scaling data center training with Intel Gaudi accelerator RoCE Networking

The unique networking design of twenty-four 100-Gigabit RDMA over Converged Ethernet (RoCE) ports integrated onto every Gaudi 2 processor gives data center system builders tremendous versatility to scale systems flexibly and cost-efficiently from one to thousands of AI processors. The San Diego Supercomputer Center team is leveraging the flexibility of RoCE connectivity in its Voyager supercomputer and is using Habana’s first-generation inference processors for model and application deployment. Voyager will serve academic researchers across a range of science and engineering domains, including astronomy, climate sciences, chemistry and particle physics.

Researchers on the Voyager supercomputer benefit from training performance and accuracy with the Supermicro X12 Training Server based on Intel Gaudi accelerators, featuring eight Intel Gaudi cards and an integrated host CPU, the dual-socket 3rd Gen Intel® Xeon® Scalable processor, and from inference with the Supermicro SuperServer 4029GP-T, containing eight Goya™ HL-100 inference PCIe cards and dual-socket 2nd Gen Intel® Xeon® Scalable processors.

Massive AI training compute capacity with easy and efficient scale-out

Scale-out node: 6 x 400 GbE

- 7 100-GbE ports for all-to-all connectivity
- 3 100-GbE ports for scale-out
- 42 training nodes in Voyager

Seven 6-node racks

252 x 400GbE QSFP-DD

- Connect to centralized networking switch

AI Voyager Project
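The port counts above fit together arithmetically, and a short sketch can make that explicit. The Python below is illustrative only: the `NodeTopology` class and the function names are hypothetical, and it assumes the 7 all-to-all and 3 scale-out ports are counted per Gaudi card, with eight cards per training node as described for the Supermicro X12 server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeTopology:
    """Port layout of one training node (illustrative; per-card counts are an assumption)."""
    cards_per_node: int = 8      # eight Intel Gaudi cards per training server
    all_to_all_ports: int = 7    # 100-GbE ports per card used inside the node
    scale_out_ports: int = 3     # 100-GbE ports per card used between nodes
    port_gbps: int = 100         # speed of each on-card port
    uplink_gbps: int = 400       # speed of each aggregated scale-out uplink


def uplinks_per_node(t: NodeTopology) -> int:
    """Number of 400-GbE uplinks needed to carry a node's scale-out ports."""
    total_gbps = t.cards_per_node * t.scale_out_ports * t.port_gbps
    uplinks, remainder = divmod(total_gbps, t.uplink_gbps)
    if remainder:
        raise ValueError("scale-out bandwidth does not divide evenly into uplinks")
    return uplinks


def cluster_summary(t: NodeTopology, nodes: int, nodes_per_rack: int) -> dict:
    """Aggregate uplink and rack counts for a cluster of identical nodes."""
    racks, remainder = divmod(nodes, nodes_per_rack)
    if remainder:
        raise ValueError("nodes do not fill a whole number of racks")
    return {
        # With N cards, each card needs N - 1 ports to reach every peer directly.
        "all_to_all_ok": t.all_to_all_ports == t.cards_per_node - 1,
        "uplinks_per_node": uplinks_per_node(t),
        "total_uplinks": nodes * uplinks_per_node(t),
        "racks": racks,
    }


summary = cluster_summary(NodeTopology(), nodes=42, nodes_per_rack=6)
print(summary)
# {'all_to_all_ok': True, 'uplinks_per_node': 6, 'total_uplinks': 252, 'racks': 7}
```

Under these assumptions, 8 cards x 3 ports x 100 GbE gives 2,400 Gb/s of scale-out bandwidth per node, which matches six 400-GbE links, and 42 such nodes account for the 252 QSFP-DD connections to the central switch.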