Habana Blog

News & Discussion

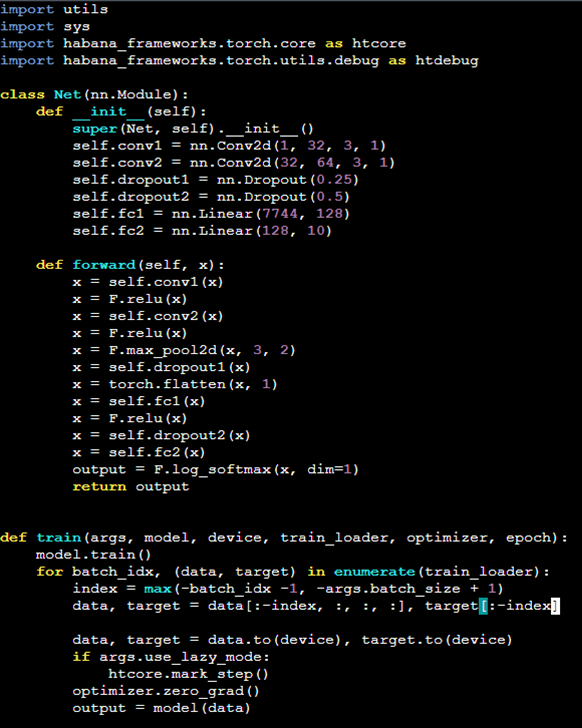

One of the main challenges in training Large Language Models (LLMs) is that they are often too large to fit on a single node, and even when they do fit, training may be too slow. To address this, training can be parallelized across multiple Gaudi accelerators (HPUs).

This Intel NewsByte was originally published in the Intel Newsroom. Habana Gaudi2 and 4th Gen Intel Xeon Scalable processors deliver leading performance and optimal cost savings for AI training. What’s New: Today, MLCommons published results of its industry AI performance benchmark, MLPerf Training 3.0, in which both the Habana® Gaudi®2 deep learning accelerator and the 4th Gen Intel® …

Equus and Habana have teamed up to simplify the process of testing, implementing and deploying AI infrastructure based on Habana Gaudi2 processors. Customers can now leverage Equus Lab-as-a-Service to reach their innovation goals while addressing the challenges created by large-scale lab infrastructures. Gaudi2 is expressly architected to deliver high-performance, high-efficiency deep learning training and inference …

AI is becoming increasingly important for retail use cases. It can provide retailers with advanced capabilities to personalize customer experiences, optimize operations, and increase sales. Habana has published a new retail use case showing an example of fine-tuning a PyTorch YOLOX model for managing shelf space and shelf inventory in the retail environment. This …

In this article, you will learn how to use Habana® Gaudi®2 to accelerate model training and inference, and train bigger models with 🤗 Optimum Habana.

Our blog today features a Riken white paper, initially prepared and published by the Intel Japan team in collaboration with Kei Taneishi, research scientist with Riken’s Institute of Physical and Chemical Research. The paper should be useful for those in the medical research domain as it addresses research cases utilizing both computer vision and natural …

Habana’s Gaudi2 delivers amazing deep learning performance and price advantage for both training and large-scale deployments, but to capture these advantages, developers need easy, nimble software and the support of a robust AI ecosystem. This week our COO, Eitan Medina, connected with Karl Freund, founder and principal analyst of Cambrian-AI Research, to discuss …