Habana Blog

News & Discussion

The Habana® team is excited to be at re:Invent 2022, November 28 – December 1. We’re proud that Amazon EC2 DL1 instances featuring Habana Labs Gaudi deep learning accelerators are providing an alternative to GPU-based EC2 instances, delivering a new level of performance and efficiency in developing, training and deploying deep learning models and workloads. …

The Habana® team is excited to be in Dallas at SuperComputing 2022. We look forward to sharing the latest performance advances for Gaudi®2, expanded software support and partner solutions from Supermicro, Inspur and Wiwynn. Additionally, in our booth #1838 we’re displaying an expanding range of deep learning use cases. We invite you to stop by …

Today MLCommons® published industry results for its AI training v2.1 benchmark. The round drew an impressive number of submissions: over 100 results from a wide array of industry suppliers, with roughly 90% of the entries for commercially available systems, ~10% in preview (not yet commercially available) and a few in “research,” not yet targeting commercial …

Gaudi and Gaudi2-based Servers Deliver Flexibility with Industry-standard Interoperability

State-of-the-art deep learning applications require multiple processors in systems with multiple inter-card links running at high speed and with ample bandwidth. To provide flexible, high-speed interconnect across multiple ASIC solutions, the OCP OAM (OCP Accelerator Module) specification defines the industry-endorsed form factor to enable interoperability across …

One of the key challenges in Large Language Model (LLM) training is reducing the memory required for training without sacrificing compute/communication efficiency or model accuracy. DeepSpeed [2] is a popular deep learning software library that facilitates memory-efficient training of large language models. DeepSpeed includes ZeRO (Zero Redundancy Optimizer), a memory-efficient approach for distributed training [5]. ZeRO has …
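To make ZeRO's memory savings concrete, here is a minimal sketch of the per-device model-state accounting described in the ZeRO paper. It assumes mixed-precision training with Adam: 2 bytes each for fp16 parameters and gradients, plus 12 bytes of optimizer state per parameter. Activations and temporary buffers are ignored. The function `zero_memory_per_device` is a hypothetical helper for illustration, not a DeepSpeed API.

```python
# Bytes per parameter under mixed-precision Adam (assumed, following the ZeRO paper):
PARAM_BYTES = 2   # fp16 parameters
GRAD_BYTES = 2    # fp16 gradients
OPTIM_BYTES = 12  # fp32 master weights + momentum + variance (4 + 4 + 4)


def zero_memory_per_device(num_params: int, num_devices: int, stage: int) -> float:
    """Approximate model-state memory (bytes) held by each device.

    Stage 0: plain data parallelism, everything replicated on every device.
    Stage 1: optimizer states partitioned across devices.
    Stage 2: optimizer states and gradients partitioned.
    Stage 3: optimizer states, gradients and parameters partitioned.
    """
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2 or 3")
    n = num_devices
    params = PARAM_BYTES * num_params / (n if stage >= 3 else 1)
    grads = GRAD_BYTES * num_params / (n if stage >= 2 else 1)
    optim = OPTIM_BYTES * num_params / (n if stage >= 1 else 1)
    return params + grads + optim


if __name__ == "__main__":
    psi, devices = 7_500_000_000, 64  # a 7.5B-parameter model on 64 devices
    for stage in range(4):
        gb = zero_memory_per_device(psi, devices, stage) / 1e9
        print(f"ZeRO stage {stage}: {gb:.1f} GB per device")
```

For this example the estimate falls from 120 GB per device without partitioning to roughly 31.4, 16.6 and 1.9 GB for stages 1, 2 and 3. Real usage will be higher once activations and communication buffers are counted.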

AI is transforming enterprises with valuable business insights, increased operational efficiency and enhanced user experiences, through innovative applications that can fuel growth. However, enterprise customers are challenged by the daily demands of growing their businesses while developing, testing and managing AI and deep learning models, workloads and systems from inception to deployment. To …

The Habana team is excited to share our deep learning technologies with you and invites you to see our demos at the Intel Innovation 2022 conference, happening today and tomorrow at the San Jose McEnery Convention Center. Our focus for the conference is on creative AI applications made possible by our new Gaudi2 Deep Learning …

In this post, we will learn how to run PyTorch V-diffusion inference on the Habana Gaudi processor, which is designed expressly to accelerate deep learning models efficiently.

Art Generation with V-diffusion

AI is unleashing a new and wide array of opportunities in the creative domain, and one of them is text-to-image applications. In this …

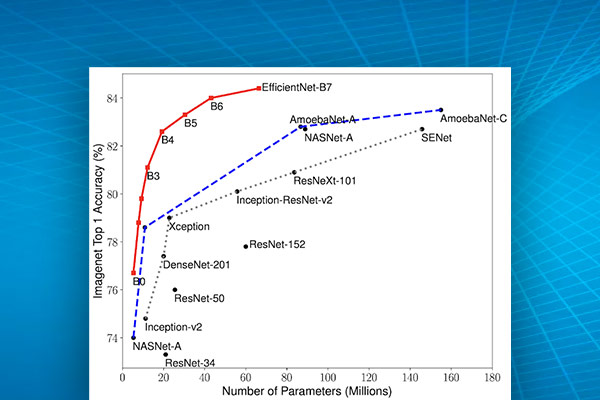

In this post, we will learn how to migrate a TensorFlow EfficientNet model that initially runs on a CPU to the Habana Gaudi training processor, which is designed expressly to accelerate the training of deep learning models efficiently. We will start by running the model on the CPU, then add the code needed to run …

The Habana team is happy to announce the release of SynapseAI® version 1.6.0. In this release, we introduce preliminary inference capabilities on Gaudi. For further details, refer to the Inference on Gaudi guide. We have published a reference model, V-Diffusion, to demonstrate the inference capabilities. We continued enabling new features with DeepSpeed, including support for ZeRO-2 …
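Here is a minimal sketch of how ZeRO-2 is typically switched on in a DeepSpeed JSON configuration. The keys `train_batch_size`, `train_micro_batch_size_per_gpu`, `gradient_accumulation_steps`, `bf16` and `zero_optimization.stage` are standard DeepSpeed config keys. This release note does not say which additional options the Habana integration supports in 1.6.0, so treat the selection here as an assumption. The helper `make_zero2_config` is hypothetical.

```python
import json


def make_zero2_config(micro_batch: int, grad_accum: int, world_size: int) -> dict:
    """Build a minimal DeepSpeed config dict enabling ZeRO stage 2 (illustrative)."""
    if min(micro_batch, grad_accum, world_size) < 1:
        raise ValueError("batch sizes and world size must be positive")
    return {
        # DeepSpeed requires: train_batch_size ==
        #   train_micro_batch_size_per_gpu * gradient_accumulation_steps * world_size
        # (the key says "gpu" but applies to whichever accelerator is used).
        "train_batch_size": micro_batch * grad_accum * world_size,
        "train_micro_batch_size_per_gpu": micro_batch,
        "gradient_accumulation_steps": grad_accum,
        "bf16": {"enabled": True},  # assumed mixed-precision choice
        "zero_optimization": {
            "stage": 2,  # partition optimizer states and gradients across workers
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_zero2_config(micro_batch=4, grad_accum=2, world_size=8), indent=2))
```

The resulting dict can be saved as a JSON file, or, in recent DeepSpeed versions, passed directly as the `config` argument of `deepspeed.initialize`.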

Much has happened at Habana since our last MLPerf submission in November 2021. We launched Gaudi®2, our second-generation deep learning training processor, and Greco™, our second-generation inference processor. We expanded our software functionality with SynapseAI®, which supports the latest versions of PyTorch, PyTorch Lightning, TensorFlow and OpenMPI. Our operator and model coverage is continually expanding …

SynapseAI 1.5 brings many improvements, both in usability and in Habana ecosystem support. For PyTorch, we removed the need for weight permutation, as well as the need to explicitly call load_habana_module. See Porting a Simple PyTorch Model to Gaudi for more information. Habana accelerator support has been upstreamed to PyTorch Lightning v1.6 and integrated with …

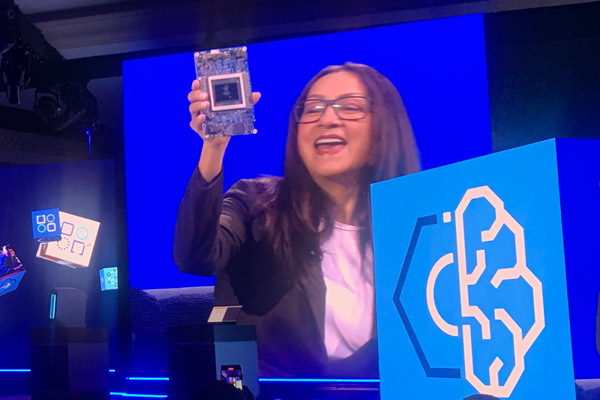

The Habana team is excited to have launched our next-gen 7nm training and inference processors, Gaudi2 for training and Greco for inference, this morning on stage at Intel Vision 22. Sandra Rivera, Executive Vice President and General Manager of the Datacenter and AI Group at Intel, announced Gaudi2 and its leap in performance and efficiency. …

We are excited to announce the release of SynapseAI 1.4.1. This is the first release that enables SynapseAI training on Habana Gaudi®2 AI processors. With this release, we have enabled training of TensorFlow ResNet50 and BERT-Large, as well as PyTorch ResNet50, on Gaudi2. Detailed instructions are available in the Model References GitHub repository. Learn more about …

As demand for deep learning applications grows among enterprises and startups, so does the need for high-performance yet cost-effective cloud solutions for training DL models. With the Amazon EC2 DL1 instance, users running computer vision and NLP workloads can train models at up to 40% lower cost than with existing GPU-based instances. In this session, …

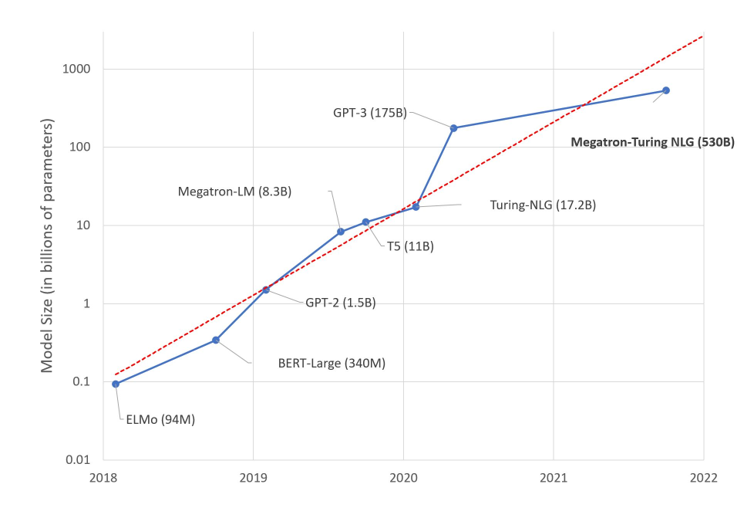

Powered by deep learning, transformer models deliver state-of-the-art performance on a wide range of machine learning tasks, such as natural language processing, computer vision, speech, and more. However, training them at scale often requires a large amount of computing power, making the whole process unnecessarily long, complex, and costly. Today, Habana® Labs, a pioneer in …

The Habana® Labs team is pleased to augment software support for the Gaudi platform with the release of SynapseAI® version 1.4.0. In this release, we have made several version updates. We now support PyTorch 1.10.2 (previously 1.10.1) and PyTorch Lightning 1.5.10 (previously 1.5.8). As before, we continue to support TensorFlow 2.8.0 and 2.7.1. The OpenMPI version was upgraded …
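When upgrading, it can help to check an environment against the framework versions listed above. Here is a minimal sketch using only the standard library's `importlib.metadata`. The pip distribution names (`torch`, `pytorch-lightning`, `tensorflow`) are assumptions, since Habana-provided builds may be packaged differently. The helpers `installed_versions` and `mismatches` are hypothetical and not part of SynapseAI.

```python
from importlib.metadata import PackageNotFoundError, version

# Framework versions listed for SynapseAI 1.4.0 in the release note above.
# Distribution names are assumptions; adjust them to match your installation.
SYNAPSEAI_1_4_0 = {
    "torch": {"1.10.2"},
    "pytorch-lightning": {"1.5.10"},
    "tensorflow": {"2.8.0", "2.7.1"},
}


def installed_versions(packages):
    """Return {package: installed version string, or None if not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


def mismatches(installed, supported):
    """Return {package: version} for installed packages not on the supported list."""
    bad = {}
    for name, ver in installed.items():
        if ver is None:
            continue  # not installed: nothing to compare
        base = ver.split("+", 1)[0]  # drop a local suffix such as "+cpu"
        if base not in supported.get(name, set()):
            bad[name] = ver
    return bad


if __name__ == "__main__":
    found = installed_versions(SYNAPSEAI_1_4_0)
    for name, ver in mismatches(found, SYNAPSEAI_1_4_0).items():
        print(f"{name} {ver} is not listed for SynapseAI 1.4.0")
```

The comparison is deliberately an exact string match. Pre-release or vendor-specific version strings would need a proper version parser.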

The Habana team is pleased to be collaborating with Grid.ai to make it easier and quicker for developers to train on Gaudi® processors with PyTorch Lightning without any code changes. Grid.ai and PyTorch Lightning make coding neural networks simple, and Habana Gaudi makes it cost-efficient to train those networks. The integration of Habana’s SynapseAI® software …

Earlier this year, machine learning algorithm developer Chaim Rand evaluated the Amazon EC2 DL1 instance, which is based on the Habana Gaudi processor, and demonstrated some of its unique properties. This was a sequel to a previous post in which he discussed using dedicated AI accelerators and the potential challenges of adopting them. In his first Medium …

The Habana® Labs team is happy to announce the release of SynapseAI® version 1.3.0. In this release, we have made several version updates. We now support PyTorch 1.10.1 (previously 1.10.0), PyTorch Lightning 1.5.8 (previously 1.5.0), TensorFlow 2.7.1 (previously 2.7.0). In addition, we are removing support for TensorFlow 2.6.2 and have added support for the recently …