In this post, we will learn how to migrate a TensorFlow EfficientNet model from a CPU to a Habana Gaudi training processor, which is designed specifically to accelerate the training of deep learning models.

We will start by running the model on a CPU, then add the code needed to run it on a single Gaudi processor. Finally, we will enable distributed training on 8 Gaudi devices.

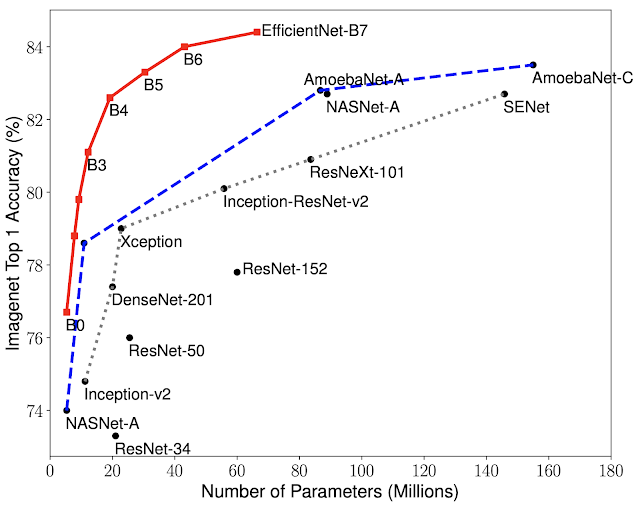

EfficientNet

EfficientNet is a lightweight convolutional neural network architecture for image classification. It achieves state-of-the-art accuracy with an order of magnitude fewer parameters and less compute, on both ImageNet and five other commonly used transfer learning datasets.

The model was first introduced by Tan et al. in "EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks". In this post, we use the EfficientNet baseline model, EfficientNet-B0, as the training example.

Setup

Start by making sure the latest Habana® SynapseAI® software suite environment is properly set up. You can find the full instructions here.

Install the extra packages required for this model:

python3 -m pip install gin-config tensorflow_addons tensorflow_datasets tensorflow-model-optimization

We will be using the Keras EfficientNet implementation at https://github.com/tensorflow/models/tree/v2.8.0/official/vision/image_classification.

Clone the model code and add it to PYTHONPATH. The export below assumes the repository was cloned into /home/ubuntu; adjust the path if you cloned it elsewhere.

git clone --depth 1 --branch v2.8.0 https://github.com/tensorflow/models.git

export PYTHONPATH=$PYTHONPATH:/home/ubuntu/models

cd models/official/vision/image_classification

Training on CPU

First, let’s run the training with synthetic data on a CPU and check its performance.

The TensorFlow models repository only provides EfficientNet configuration files for GPU and TPU. We will use the following command to override the existing GPU configuration and run EfficientNet-B0 on CPU.

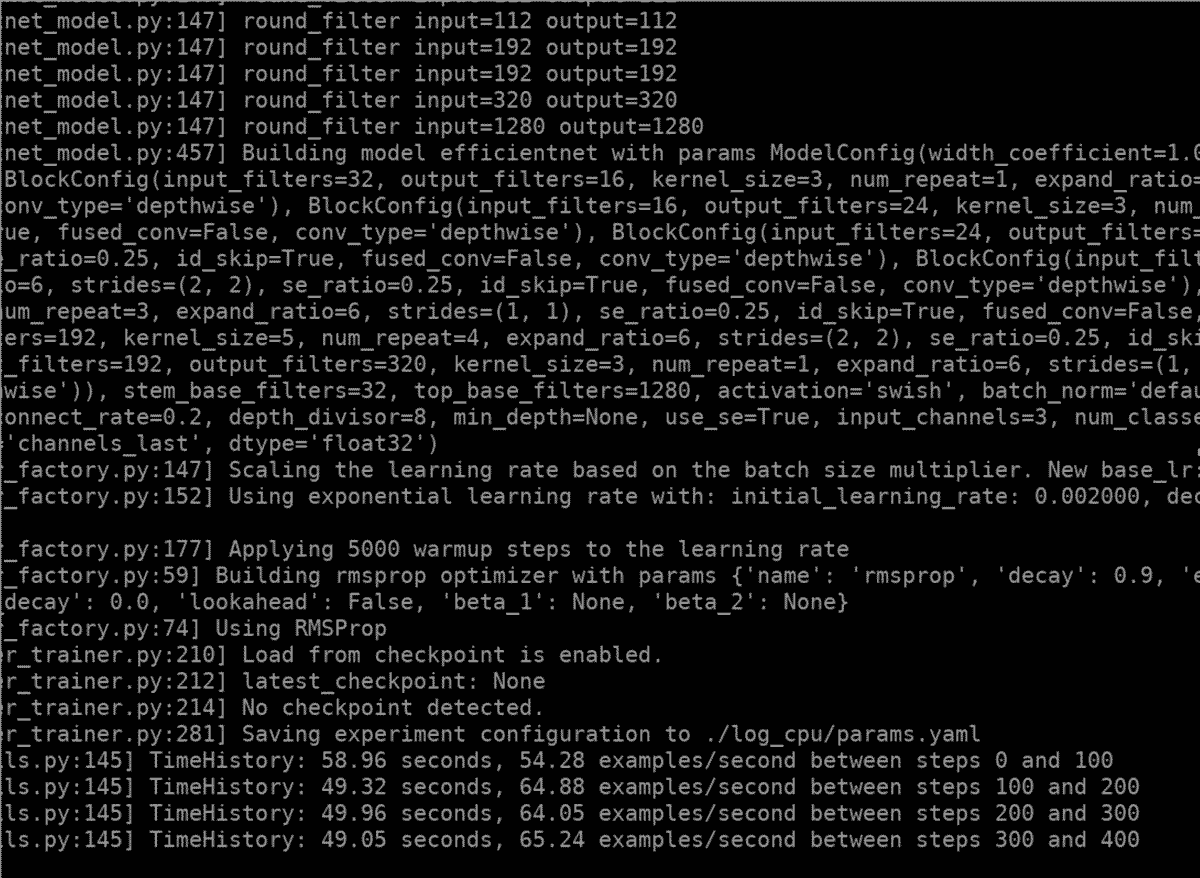

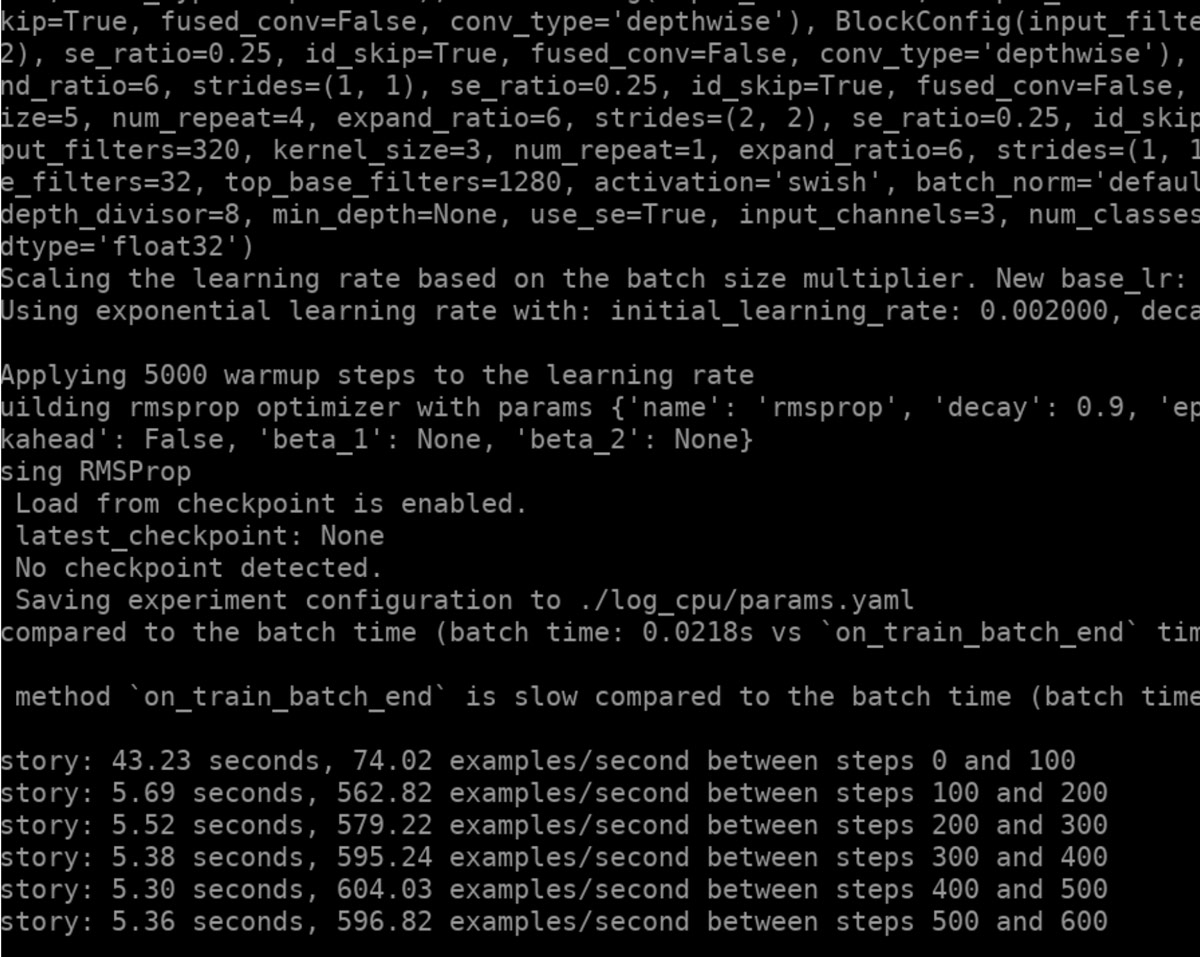

python3 classifier_trainer.py --mode=train_and_eval --model_type=efficientnet --dataset=imagenet --model_dir=./log_cpu --data_dir=./ --config_file=configs/examples/efficientnet/imagenet/efficientnet-b0-gpu.yaml --params_override='runtime.num_gpus=0,runtime.distribution_strategy="off",train_dataset.builder="synthetic",validation_dataset.builder="synthetic",train.steps=1000,train.epochs=1,evaluation.skip_eval=True'

The EfficientNet-B0 training results on CPU appear as below:

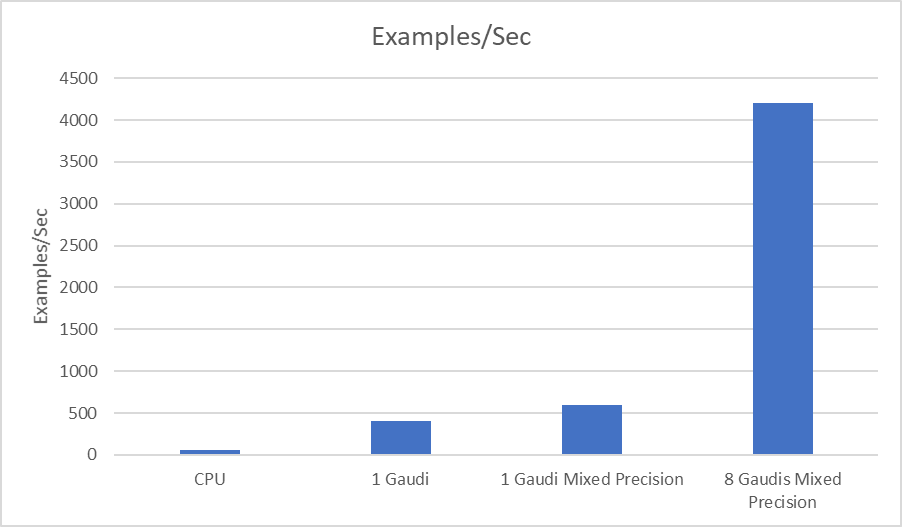

From the output log above, we can see that the throughput for EfficientNet-B0 training on CPU with synthetic data is around 60 examples/sec.
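Every run in this post reuses the same long --params_override value, so it can help to generate it rather than retype it. The sketch below is one way to do that in Python. `format_overrides` and `SYNTHETIC_OVERRIDES` are hypothetical names for illustration, not part of the TensorFlow models repository, and the helper only handles the flat key=value pairs used in this post.

```python
# Sketch: build the --params_override value used in this post from a dict.
# format_overrides is a hypothetical helper, not a TensorFlow models API.

SYNTHETIC_OVERRIDES = {
    "runtime.num_gpus": 0,
    "runtime.distribution_strategy": "off",
    "train_dataset.builder": "synthetic",
    "validation_dataset.builder": "synthetic",
    "train.steps": 1000,
    "train.epochs": 1,
    "evaluation.skip_eval": True,
}


def format_overrides(overrides: dict) -> str:
    """Render a flat dict as comma-separated key=value pairs.

    Strings are wrapped in double quotes, matching the commands in this post;
    booleans and numbers are written as-is.
    """
    parts = []
    for key, value in overrides.items():
        if isinstance(value, str):
            rendered = f'"{value}"'
        else:
            rendered = str(value)  # True/False, ints, floats
        parts.append(f"{key}={rendered}")
    return ",".join(parts)


if __name__ == "__main__":
    # Paste the printed value inside --params_override='...'
    print(format_overrides(SYNTHETIC_OVERRIDES))
```

Keeping the overrides in one dict makes the difference between runs explicit: the distributed run later in this post changes only the runtime.distribution_strategy entry.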

Training on a single Gaudi Processor

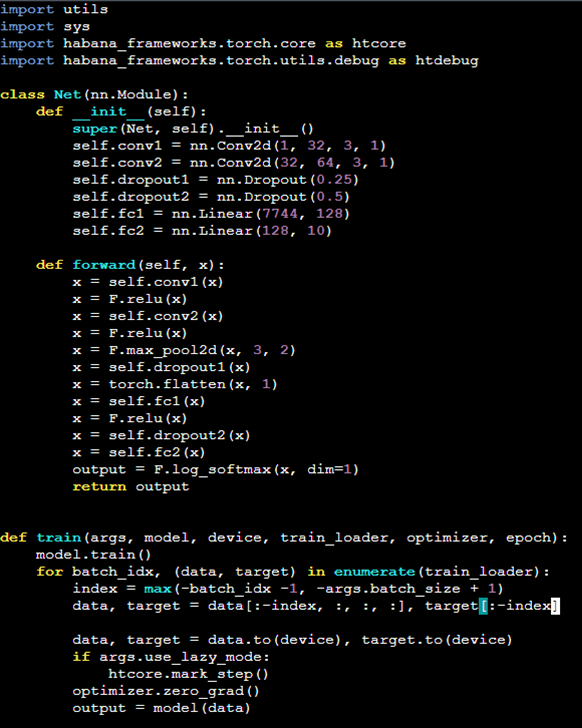

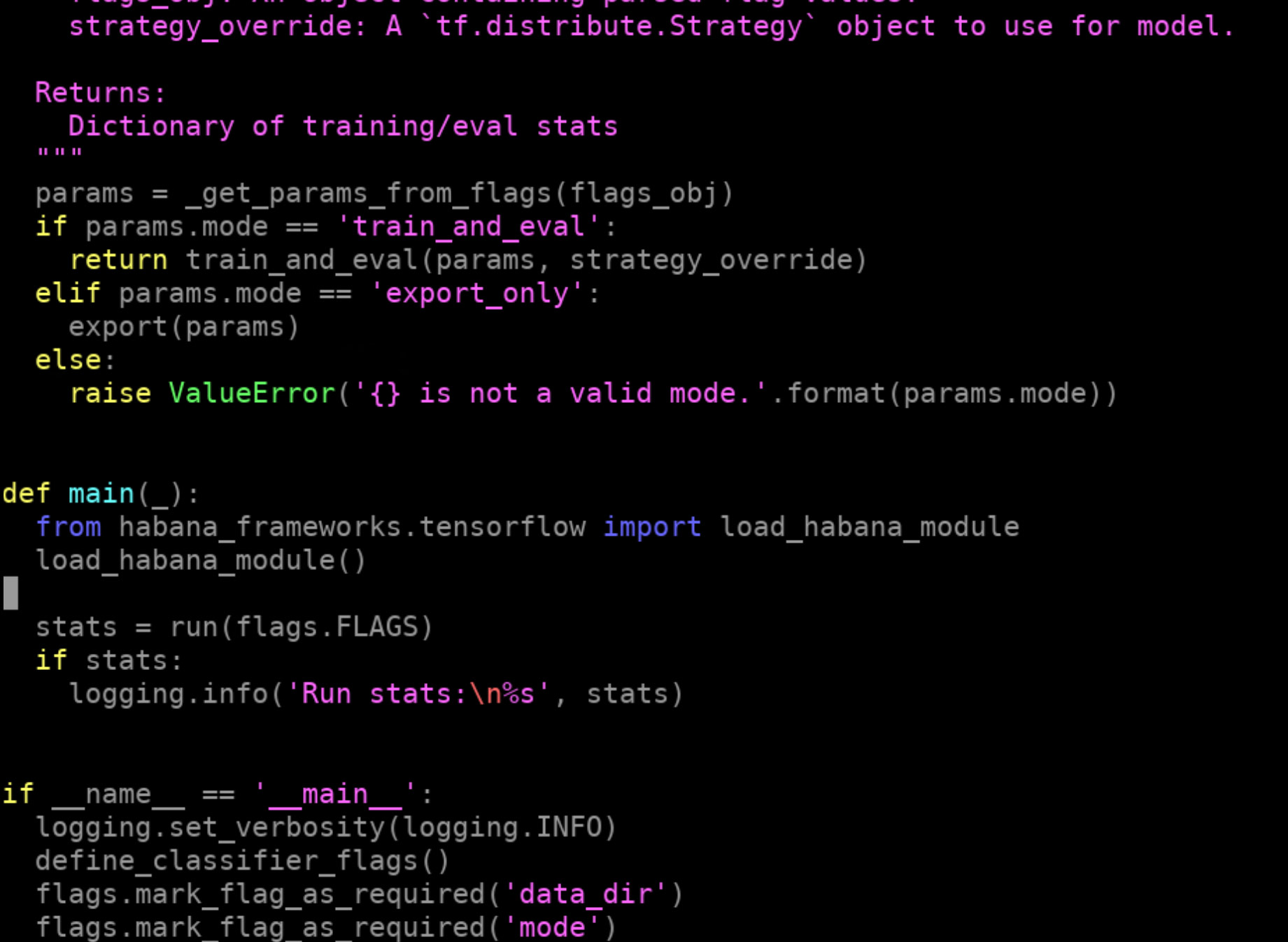

Now, let’s modify the training script to run on a Gaudi device, also referred to as an ‘HPU’. To do that, we need to load the Habana library. Edit the file models/official/vision/image_classification/classifier_trainer.py and insert the following two lines of code at line 443:

from habana_frameworks.tensorflow import load_habana_module

load_habana_module()

Save the file, and it is ready to run. We will use the same command as above to launch the training, changing only the model directory to ./log_hpu. This time, EfficientNet will be trained on a single Gaudi device.

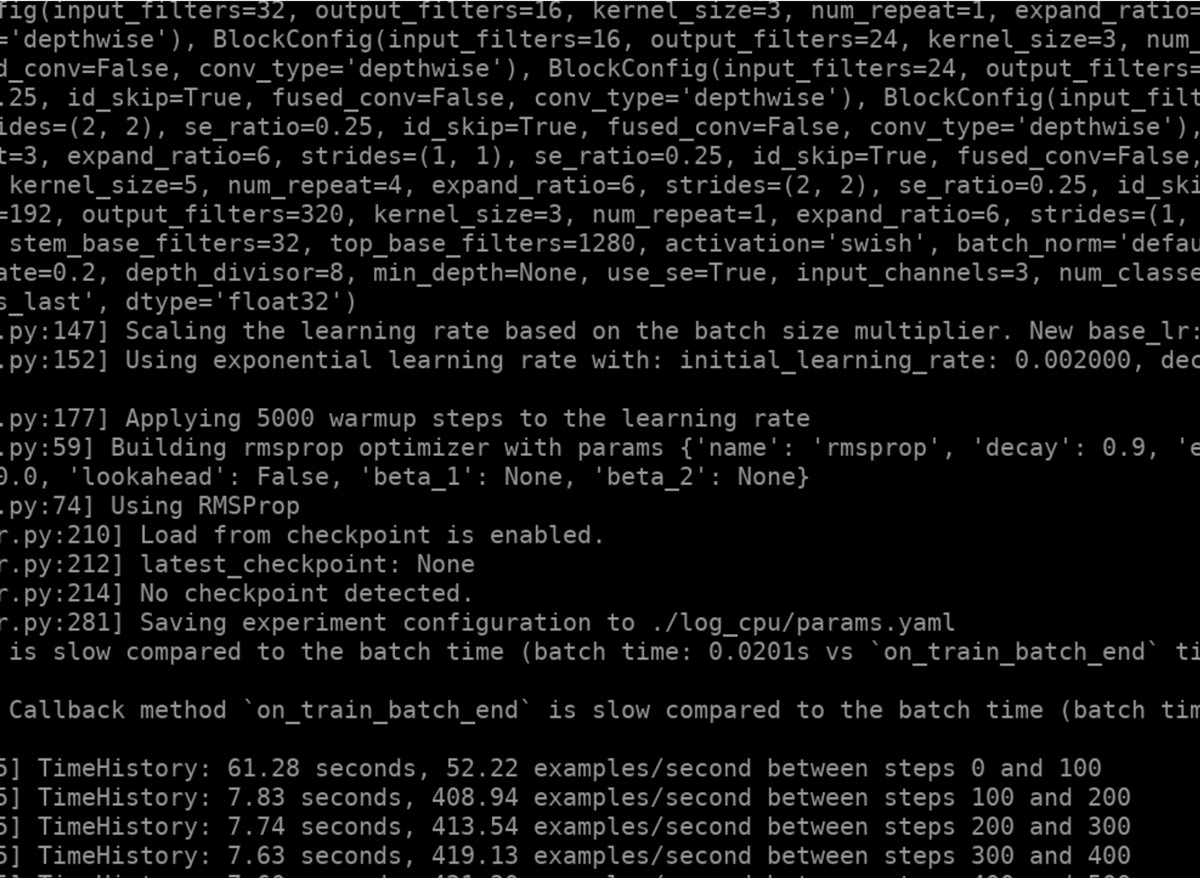

python3 classifier_trainer.py --mode=train_and_eval --model_type=efficientnet --dataset=imagenet --model_dir=./log_hpu --data_dir=./ --config_file=configs/examples/efficientnet/imagenet/efficientnet-b0-gpu.yaml --params_override='runtime.num_gpus=0,runtime.distribution_strategy="off",train_dataset.builder="synthetic",validation_dataset.builder="synthetic",train.steps=1000,train.epochs=1,evaluation.skip_eval=True'

From the output log, we can see that the throughput for EfficientNet-B0 training on Habana Gaudi with synthetic data is around 400 examples/sec.
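The two-line edit above loads the Habana module unconditionally, which is all this walkthrough needs. If you want the same script to keep working on a machine without SynapseAI installed, one possible variation is to guard the import. The sketch below is an assumption about how you might structure that, not something the original training script does; `load_habana_if_available` is a hypothetical helper name.

```python
# Sketch: load the Habana module only when the package is installed.
# load_habana_if_available is a hypothetical helper, not a SynapseAI API.


def load_habana_if_available() -> bool:
    """Return True if the Habana module was loaded, False if the package is missing."""
    try:
        # Same import as the two-line edit to classifier_trainer.py.
        from habana_frameworks.tensorflow import load_habana_module
    except ImportError:
        # Assumption: without the package, TensorFlow keeps using its default device.
        return False
    load_habana_module()
    return True


if __name__ == "__main__":
    if load_habana_if_available():
        print("Habana module loaded; training can use the HPU.")
    else:
        print("habana_frameworks not installed; using TensorFlow's default device.")
```

The guard only covers a missing package. Errors raised by load_habana_module() itself, for example when no device is present, are deliberately left to surface.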

Training with Mixed Precision

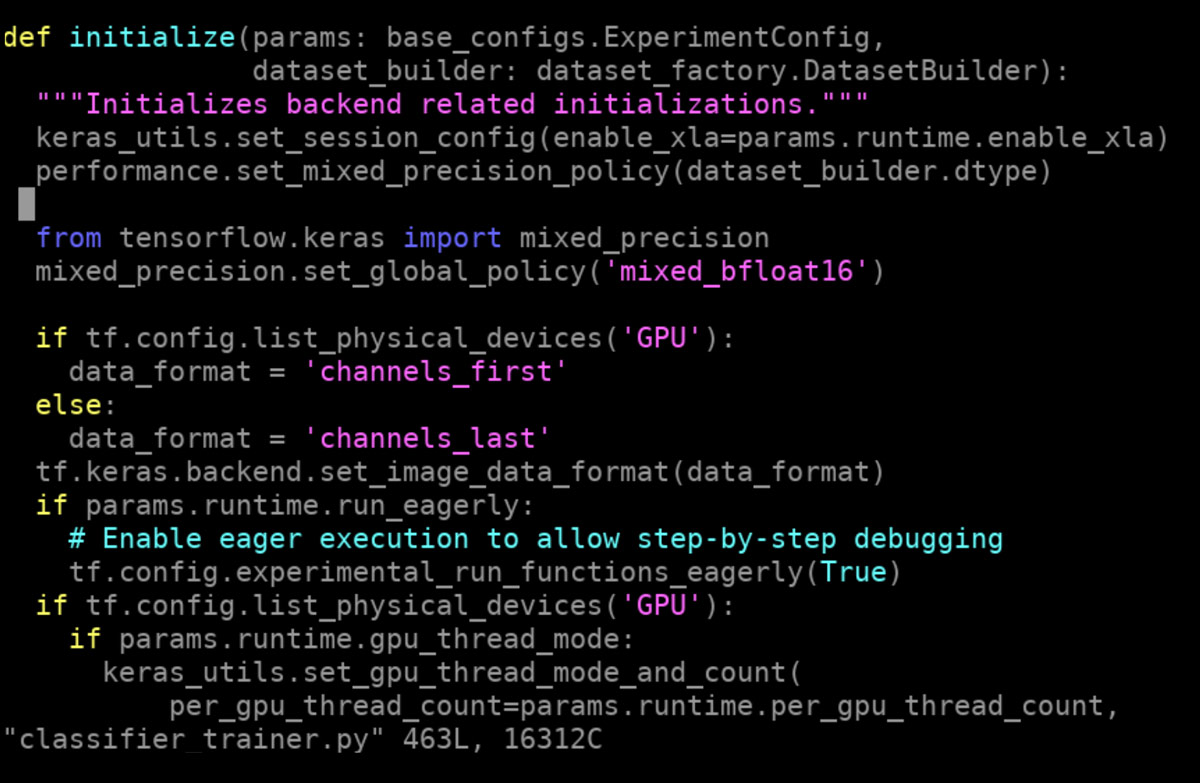

Mixed precision is the use of both 16-bit (bfloat16) and 32-bit floating-point types in a model during training to make it run faster and use less memory. You can enable mixed precision with SynapseAI and Gaudi processors using the Keras mixed precision API.
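To make the trade-off concrete: bfloat16 keeps the 8-bit exponent of float32 but only 7 explicit mantissa bits, so it covers roughly the same range at much lower precision. The sketch below emulates that rounding in plain Python with the standard struct module. `to_bfloat16` is an illustrative helper, not a SynapseAI or Keras API, and it does not treat NaN inputs specially.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value and return it as a Python float.

    bfloat16 is the upper 16 bits of an IEEE float32, so we round the float32
    bit pattern to 16 bits (round-to-nearest-even) and zero the lower half.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add 0x7FFF plus the lowest kept bit: ties round to the even neighbour.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    rounded = (bits + rounding_bias) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", rounded))[0]


if __name__ == "__main__":
    # Near 1.0, neighbouring bfloat16 values are 2**-7 = 0.0078125 apart.
    print(to_bfloat16(1.001))  # 1.0
    print(to_bfloat16(1.01))   # 1.0078125
    # Large magnitudes stay finite because the exponent width matches float32.
    print(to_bfloat16(3.0e38))
```

This is why the mixed_bfloat16 policy keeps variables in float32 while running computations in bfloat16: small weight updates would otherwise be rounded away.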

Edit the file models/official/vision/image_classification/classifier_trainer.py and replace line 231 with the following two lines of code:

from tensorflow.keras import mixed_precision

mixed_precision.set_global_policy('mixed_bfloat16')

Save the file and use the same command to launch the training.

python3 classifier_trainer.py --mode=train_and_eval --model_type=efficientnet --dataset=imagenet --model_dir=./log_hpu --data_dir=./ --config_file=configs/examples/efficientnet/imagenet/efficientnet-b0-gpu.yaml --params_override='runtime.num_gpus=0,runtime.distribution_strategy="off",train_dataset.builder="synthetic",validation_dataset.builder="synthetic",train.steps=1000,train.epochs=1,evaluation.skip_eval=True'

From the output log, we can see that with mixed precision the throughput for EfficientNet-B0 training on Habana Gaudi with synthetic data increases to almost 600 examples/sec.

Distributed Training on 8 Gaudis

Using Gaudi instead of CPUs already accelerated our training by roughly 10X. To increase our training throughput even further, we will use a technique called distributed training. Distributed training allows us to run the training workload on more than one device at the same time. In this example we will run it on 8 different Gaudis.

In the original source code, tf.distribute.Strategy is used to support the distributed training for TPU and GPU. We will use the tf.distribute APIs to enable the distributed training on multiple Gaudis using HPUStrategy. HPUStrategy is similar to the MultiWorkerMirroredStrategy, in which each worker runs in a separate process.

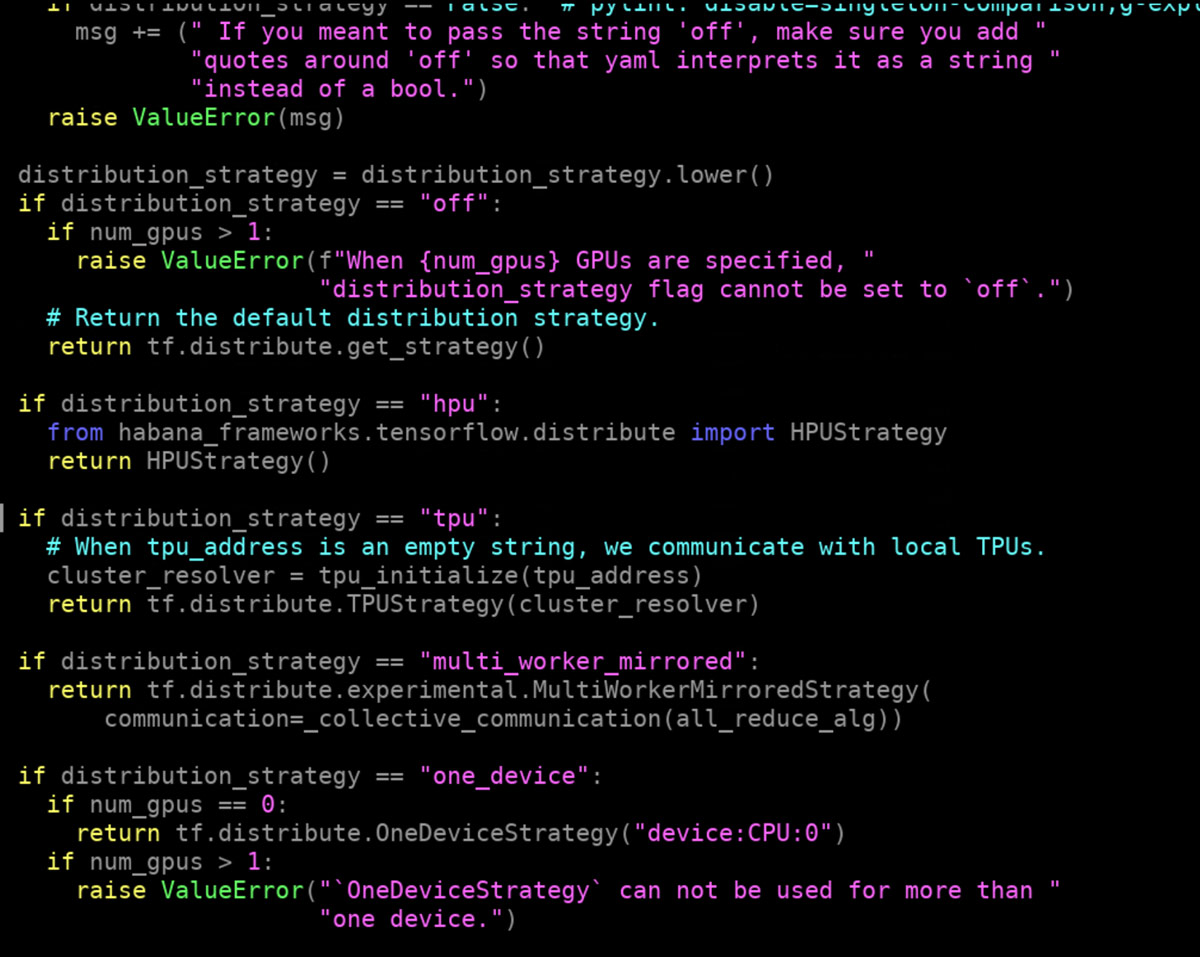

Edit the file models/official/common/distribute_utils.py and, at line 148, insert the following code, matching the indentation of the surrounding code:

if distribution_strategy == "hpu":
    from habana_frameworks.tensorflow.distribute import HPUStrategy
    return HPUStrategy()

Save the file.

We will use MPI in our example to launch the multiple worker processes. We will configure the TF_CONFIG environment variable by reusing the existing distribute_utils.configure_cluster() function in the code:

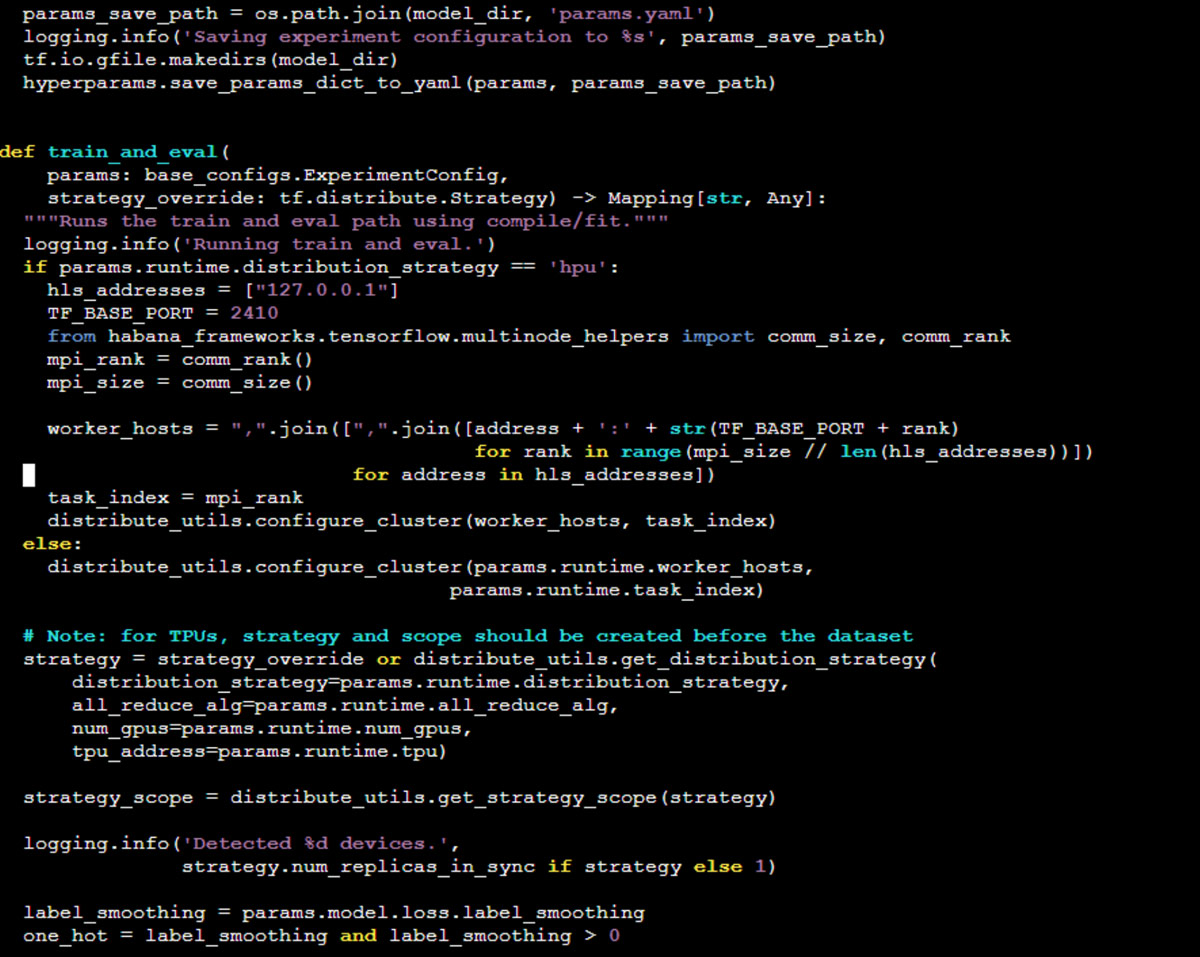

Edit the file models/official/vision/image_classification/classifier_trainer.py and replace lines 292 and 293 with the following code:

if params.runtime.distribution_strategy == 'hpu':
    # Single host: every worker listens on localhost, on its own port.
    hls_addresses = ["127.0.0.1"]
    TF_BASE_PORT = 2410
    from habana_frameworks.tensorflow.multinode_helpers import comm_size, comm_rank
    mpi_rank = comm_rank()
    mpi_size = comm_size()
    # Build "host:port" entries, one per MPI process.
    worker_hosts = ",".join([",".join([address + ':' + str(TF_BASE_PORT + rank)
                                       for rank in range(mpi_size // len(hls_addresses))])
                             for address in hls_addresses])
    task_index = mpi_rank
    distribute_utils.configure_cluster(worker_hosts, task_index)
else:
    distribute_utils.configure_cluster(params.runtime.worker_hosts,
                                       params.runtime.task_index)

Save the file.
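To see what this edit sets up, the sketch below rebuilds the worker list the same way and prints the TF_CONFIG value for one worker. It assumes that configure_cluster() writes the standard TF_CONFIG layout read by TensorFlow's multi-worker strategies (a "cluster" of workers plus this process's "task"). `build_worker_hosts` and `build_tf_config` are hypothetical helpers for illustration, and the MPI size and rank are passed in as plain integers instead of being read from habana_frameworks.

```python
import json


def build_worker_hosts(addresses, base_port, world_size):
    """Return one "host:port" entry per process, spread evenly over the hosts."""
    per_host = world_size // len(addresses)
    return [
        f"{address}:{base_port + rank}"
        for address in addresses
        for rank in range(per_host)
    ]


def build_tf_config(workers, task_index):
    """Return a TF_CONFIG-style dict for the worker at task_index (assumed layout)."""
    return {
        "cluster": {"worker": workers},
        "task": {"type": "worker", "index": task_index},
    }


if __name__ == "__main__":
    # Values from this post: one host, base port 2410, 8 MPI processes.
    workers = build_worker_hosts(["127.0.0.1"], 2410, 8)
    # Show what the process with MPI rank 3 would export as TF_CONFIG.
    print(json.dumps(build_tf_config(workers, 3), indent=2))
```

Each of the 8 processes ends up with the same worker list but a different task index, which is how the processes find each other on one machine.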

Now we will launch 8 processes with the mpirun command to start distributed training of EfficientNet on 8 Gaudi devices with HPUStrategy:

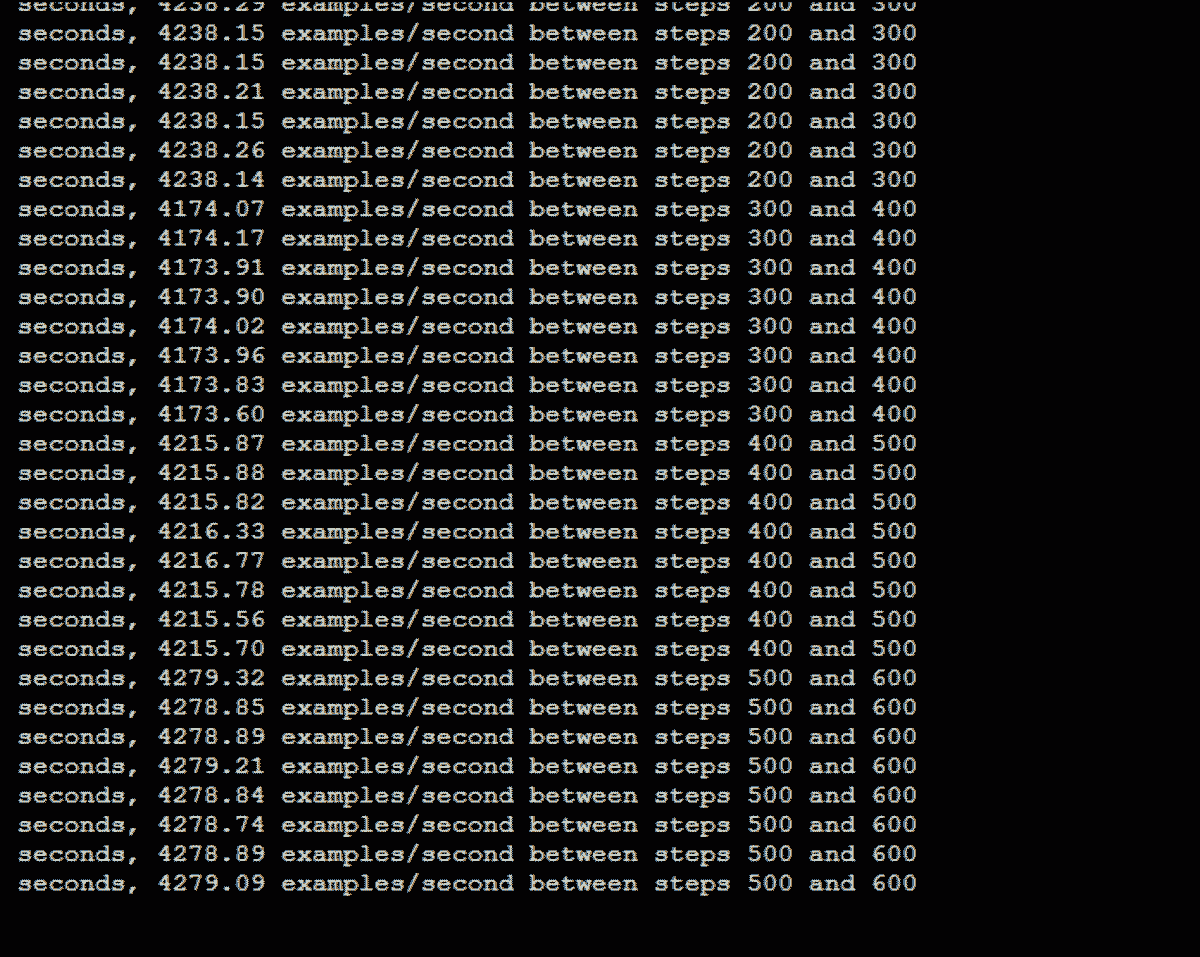

mpirun --allow-run-as-root -np 8 -x TF_ENABLE_BF16_CONVERSION=1 python3 classifier_trainer.py --mode=train_and_eval --model_type=efficientnet --dataset=imagenet --model_dir=./log_hpu_8 --data_dir=./ --config_file=configs/examples/efficientnet/imagenet/efficientnet-b0-gpu.yaml --params_override='runtime.num_gpus=0,runtime.distribution_strategy="hpu",train_dataset.builder="synthetic",validation_dataset.builder="synthetic",train.steps=1000,train.epochs=1,evaluation.skip_eval=True'

Now you can see that with 8 Gaudi devices, the training throughput improves significantly, to approximately 4200 examples/sec.
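The throughput figures quoted in this post can be turned into speedups and a scaling efficiency with a few lines of arithmetic. The sketch below uses the rounded numbers from the text (about 60, 400, 600 and 4200 examples/sec), so the ratios are approximate. Comparing the 8-device run against the single-device mixed precision run is an assumption made here for illustration.

```python
# Rounded throughputs quoted in this post, in examples/sec.
THROUGHPUT = {
    "cpu": 60,
    "gaudi_1": 400,
    "gaudi_1_mixed": 600,
    "gaudi_8": 4200,
}


def speedup(new: float, baseline: float) -> float:
    """How many times faster `new` is than `baseline`."""
    return new / baseline


def scaling_efficiency(multi: float, single: float, devices: int) -> float:
    """Fraction of ideal linear scaling achieved on `devices` devices."""
    return multi / (single * devices)


if __name__ == "__main__":
    cpu = THROUGHPUT["cpu"]
    for name in ("gaudi_1", "gaudi_1_mixed", "gaudi_8"):
        print(f"{name}: {speedup(THROUGHPUT[name], cpu):.1f}x over CPU")
    # Assumption: the single-device mixed precision run is the scaling baseline.
    eff = scaling_efficiency(THROUGHPUT["gaudi_8"], THROUGHPUT["gaudi_1_mixed"], 8)
    print(f"8-device scaling efficiency: {eff:.1%}")
```

With these rounded inputs, the 8-device run reaches a bit under 90% of ideal linear scaling relative to one device with mixed precision.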

Conclusion

In this post, we demonstrated how moving from CPU training to Gaudi-accelerated training provided a roughly 70X increase in training throughput, from about 60 to about 4200 examples/sec. As you have seen, there is no need to rewrite the training code: a few small edits are enough. Further optimizations to the model training performance, such as mixed precision and distributed training, are also easy to add thanks to the tight integration of SynapseAI with the leading deep learning training tools.