At Habana, we’re laser-focused on providing you, our customers (data scientists, developers, researchers, and IT and systems administrators), with the software, tools and support you need to put the price-performance, usability and scalability of Habana Gaudi® AI training solutions to work, whether you’re using Gaudi in the cloud, in your own data center or both. Today we’re introducing the Habana Developer Site, developer.habana.ai, which has been in a quiet beta since April while developers road-tested it for usability and efficacy. On the site, you will find the content, guidance, tools and support you need to easily and flexibly build new AI models or migrate existing ones, and to optimize their performance.

We believe that designing and developing the hardware for high-performance, efficient deep learning (DL) processors accounts for a relatively small portion of Habana’s effort. The majority is dedicated to the software, tools and support you need to put that hardware to work, so that your workloads and models run efficiently, accurately and fast.

Of course, it all starts with the Gaudi hardware architecture. Designed to accelerate AI training workloads, it features eight fully programmable Tensor Processor Cores (TPCs) and a configurable Matrix Math Engine. Gaudi gives you the flexibility to customize and innovate, with several workload-oriented features, including GEMM operation acceleration, tensor addressing, latency-hiding capabilities, random number generation, and advanced implementation of special functions. Gaudi is also the only AI processor that integrates ten 100 Gigabit Ethernet RoCE (RDMA over Converged Ethernet) ports on-chip. This enables easy and efficient scaling from one to thousands of accelerators and reduces component costs, because scale-out networking does not depend on separate network interface cards.
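For a sense of scale, here is a back-of-the-envelope tally of Gaudi’s on-chip networking capacity. It assumes every one of the ten ports runs at its nominal 100 Gb/s line rate and counts a single direction only; achievable throughput in practice will be lower once protocol overhead and network topology are taken into account.

$$
10 \times 100\ \text{Gb/s} = 1000\ \text{Gb/s} = 1\ \text{Tb/s}, \qquad \frac{1000\ \text{Gb/s}}{8\ \text{bits/byte}} = 125\ \text{GB/s}
$$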

The developer journey begins with the Habana® SynapseAI® SDK. We won’t go into the details of the SDK itself here; if you want to learn more, please take a look at our Documentation page. The SynapseAI® Software Suite is designed to facilitate high-performance DL training on Habana’s Gaudi processors. It includes Habana’s graph compiler and runtime, TPC kernel library, firmware and drivers, and developer tools such as the Habana profiler and TPC SDK for custom kernel development.

SynapseAI is integrated with the TensorFlow and PyTorch frameworks. Our TensorFlow integration is more mature than our PyTorch integration because we started PyTorch development two quarters later. As a result, PyTorch models will initially have lower performance (throughput and time-to-train) than similar TensorFlow models. We have documented the known limitations in our SynapseAI user guides as well as in the reference models on GitHub. We will publish performance results for the reference models on both the Habana Developer Site and the Model-References GitHub repository. The Habana team is committed to improving both usability and performance in subsequent releases.

We know there is much to be done, and we are counting on you to explore Gaudi and give us your feedback and requests. We look forward to engaging with you through our Developer Site.

Developer Site

Developer.habana.ai is the hub for Habana developers and IT and systems administrators, where you can find Gaudi software, optimized models, documentation and “how to” content. It also hosts a Forum for the Habana developer community. The site is designed for fast, easy navigation to exactly what you need to build or migrate models to Gaudi or to build Gaudi-based on-premises systems. It comprises four major content sections (Documentation, Resources, Software Vault and Habana Community Forum) and links directly to the Habana GitHub.

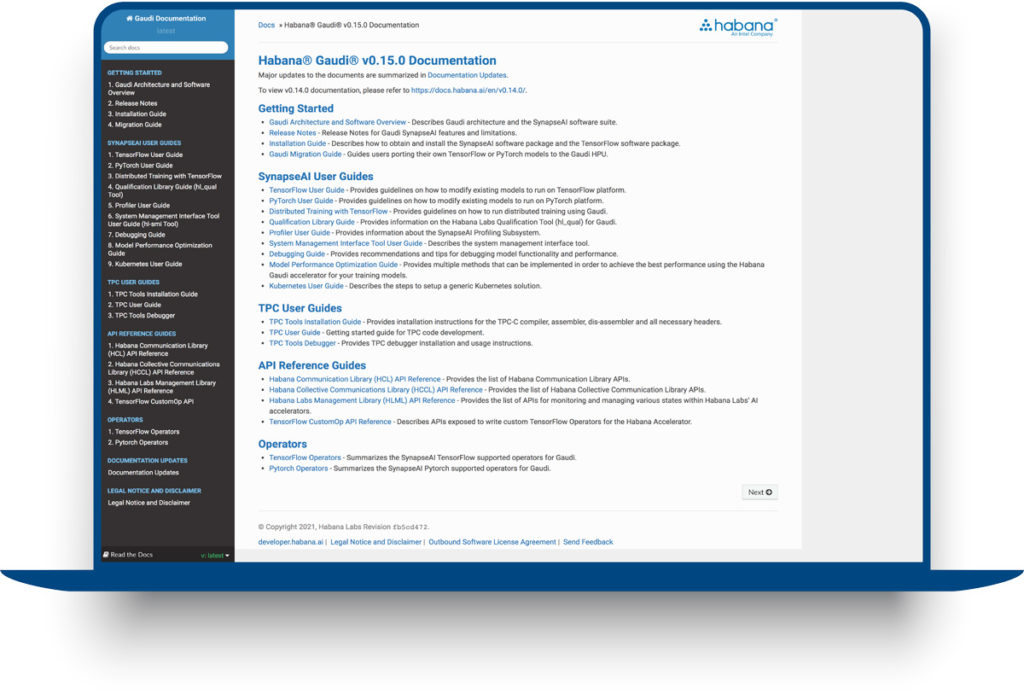

Documentation

Habana’s Documentation section hosts detailed documentation for Getting Started on Gaudi, SynapseAI User Guides, TPC User Guides, API Reference Guides and Operators. It is web-based and searchable, covers the latest software release, and includes an archive of documentation for prior releases. We also provide developers with a “Documentation Update,” which summarizes the major changes since the previous software release.

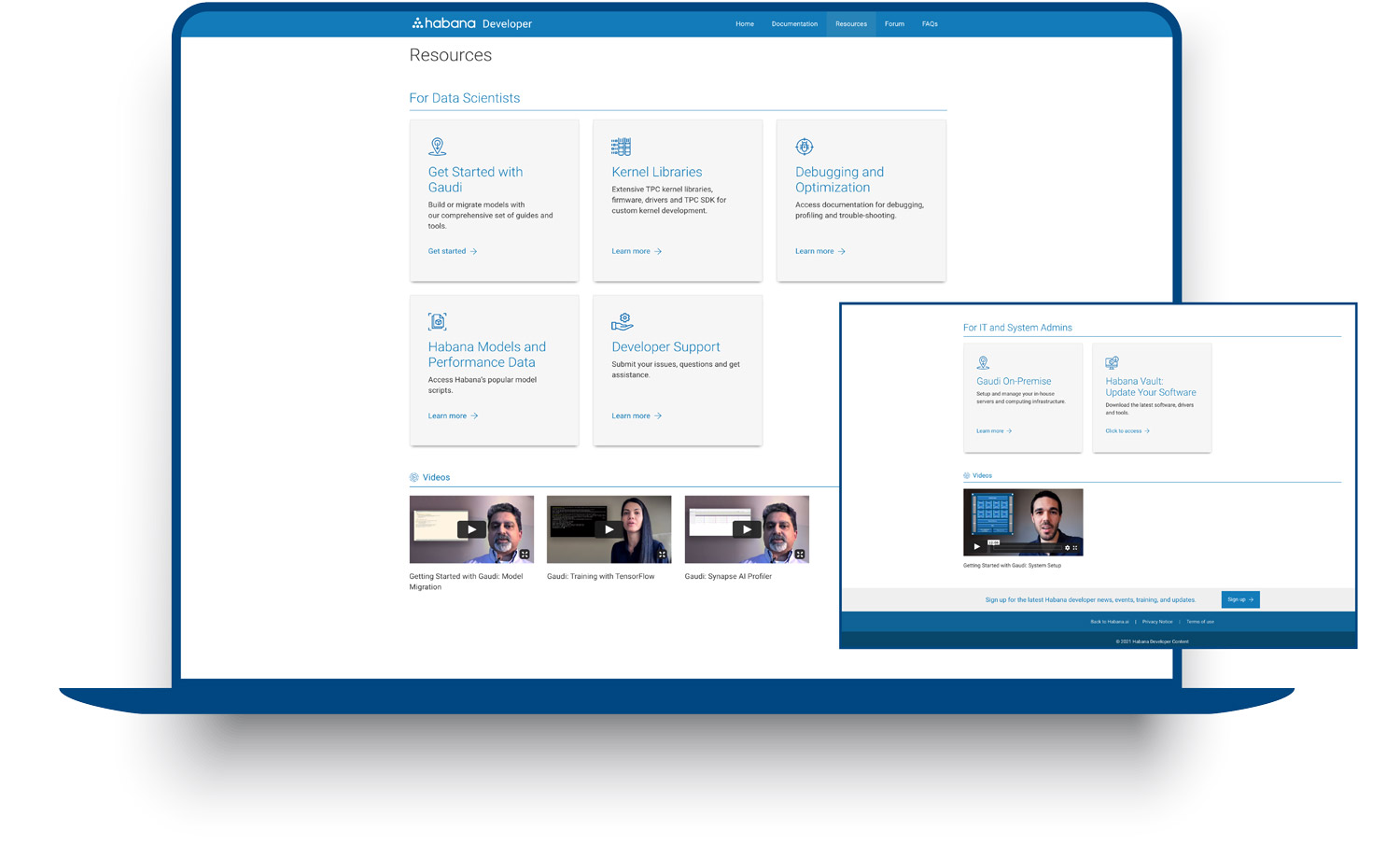

Resources

The Resources area of the developer site provides easy navigation to the information, guides, content and software needed to implement Gaudi-based models and systems. For the Gaudi developer community, we’ve assembled how-to videos, guides and tools. Topics include:

- Getting Started with Gaudi, including basic steps for model build and migration

- Kernel Libraries and the Tensor Processor Core SDK for custom kernel development

- Debugging and Optimizing Models

And for IT and systems administrators building Gaudi-based systems on premises, we provide guidance on setting up and managing Gaudi servers and computing infrastructure.

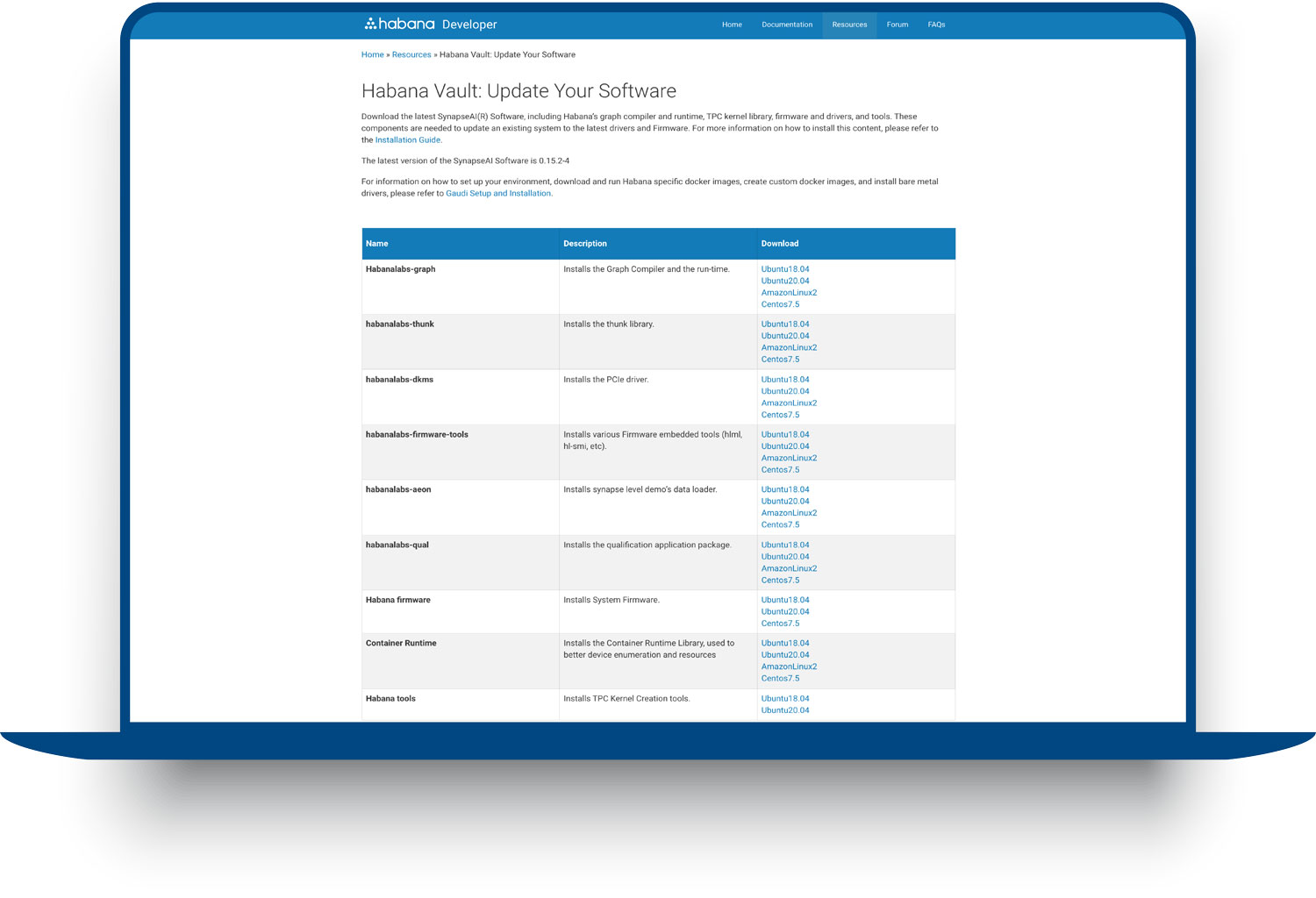

Software Vault

The Habana Vault provides access to Habana’s software releases. Public content is available through the Vault’s open access, while NDA access is provided to Habana’s ODM and OEM partners. The Vault contains the publicly available official releases of SynapseAI®, including the Habana graph compiler and runtime, TPC kernel library, firmware and drivers, and the tools needed to update an existing system to the latest drivers and firmware. The Vault also hosts the SynapseAI TensorFlow and PyTorch Docker container images, which can be deployed easily and consistently whether the target environment is a private data center or a public cloud. For users who want to build their own Docker images, the Setup and Install repository on the Habana GitHub provides Dockerfiles and build instructions.

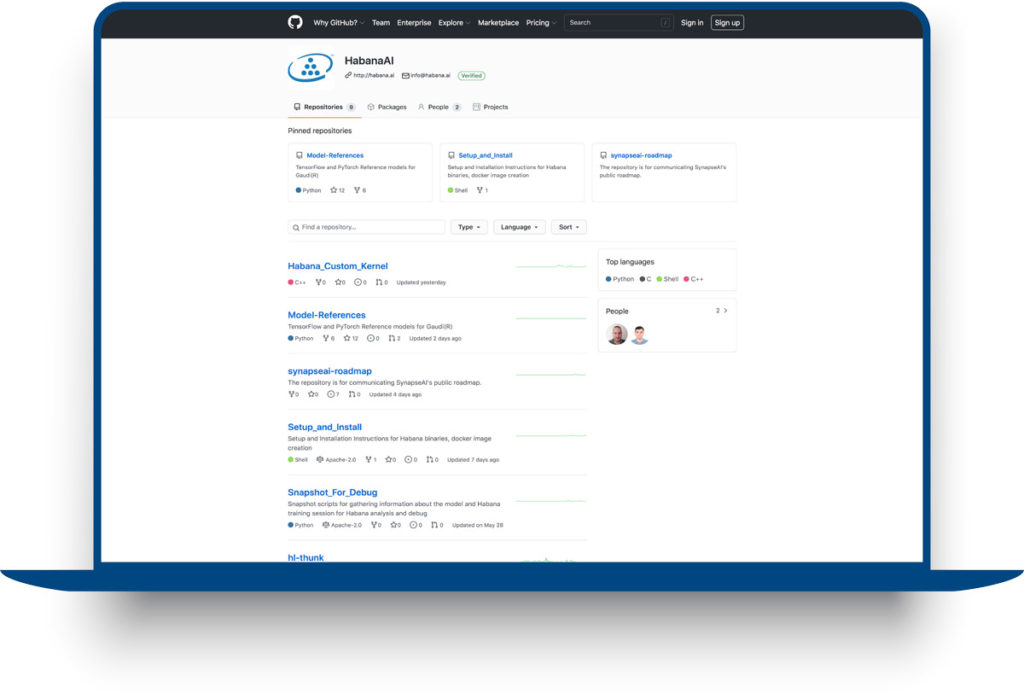

Habana GitHub

The Habana GitHub is easy to reach from Habana’s Developer Site or directly at https://github.com/HabanaAI. It contains repositories for our TensorFlow and PyTorch reference models, setup and install instructions for Habana binaries and Docker image creation, and our public SynapseAI® roadmap. Users can also log issues on GitHub and get timely support from Habana.

The Setup and Install repository contains instructions for accessing and installing Habana drivers on bare metal, along with Dockerfiles and instructions for building your own SynapseAI Docker images with TensorFlow or PyTorch. It also provides guidance on setting up the environment with Gaudi firmware and drivers, installing the SynapseAI TensorFlow and PyTorch containers, and running with containers. The Reference Models repository contains examples of DL training models implemented on Gaudi. Each model comes with model scripts and instructions on how to run it on Gaudi. We are committed to continuously expanding our model coverage and providing a wide variety of examples for users.

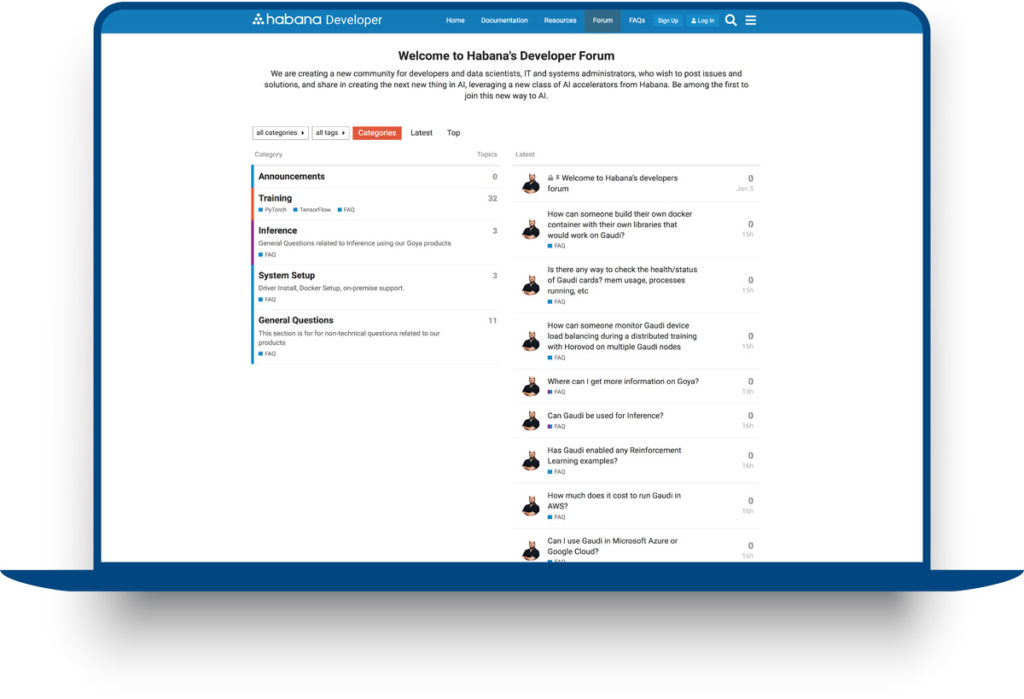

Habana Community Forum

The Forum is a dynamic resource where the developer community can find answers to their questions about implementing or managing Gaudi-based systems, and share their own insights and perspectives with others working with Habana and Gaudi. The Forum is still new, as it opened to members and visitors only recently. We invite you to join as one of its first members and help build a robust, vital community of AI thought leaders and builders who seek to leverage the unprecedented benefits of AI training and inference with purpose-built AI processors: Gaudi, Goya and their coming generations. For quick answers to frequently asked questions, the Forum includes an FAQ. Habana experts will be available on the Forum to answer your questions and support you. You can also get support by filing issues on the Habana GitHub.

Come join us!

We encourage you to check out our Developer Site if you haven’t already. The Habana team looks forward to learning and creating more as we engage with users of the Developer Site and hear your requirements and requests. Please take a look and let us know what you think, and what you’d like to see if it’s not already there, at [email protected]

Authored by: Sree Ganesan, AI SW Product Management at Habana Labs