Habana Gaudi’s compute efficiency and integration bring new levels of price-performance to both cloud and on-premises data-center customers. cnvrg.io Metacloud is transforming the way enterprises manage, scale, and accelerate AI and data science development from research to production, and it offers the flexibility to run on-premises, in the cloud, or both. Habana and cnvrg.io have partnered to bring together the best of both worlds for AI developers.

Gaudi now powers instances on the AWS cloud. The Gaudi-based Amazon EC2 DL1 instances feature eight Gaudi accelerators and deliver up to 40% better price/performance than current GPU-based EC2 instances for training deep learning (DL) models. This cost-performance advantage makes Habana Gaudi a compelling addition to enterprises’ AI compute portfolios. With the cnvrg.io MLOps platform, enterprises can now easily deploy Gaudi’s computational power and cost efficiency. AI developers can reduce training costs and innovate faster by optimizing training capacity, effectively merging their on-premises capacity with Amazon EC2 DL1 instances powered by Gaudi accelerators. Furthermore, because cnvrg.io is integrated with CPU, GPU, and Habana Gaudi platforms, enterprises can run each AI workload on the compute platform best suited to it, without the time and overhead of manually deploying and configuring hardware.
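To make the “up to 40% better price/performance” figure concrete: price/performance here means training work completed per dollar. The minimal sketch below shows the arithmetic using hypothetical throughput and hourly prices; these are placeholders, not AWS list prices or benchmark results.

```python
# Minimal sketch of what "X% better price/performance" means.
# All numbers below are hypothetical placeholders, not measured results.


def price_performance(throughput_per_hour: float, cost_per_hour: float) -> float:
    """Training work delivered per dollar (e.g. samples processed per USD)."""
    if cost_per_hour <= 0:
        raise ValueError("cost_per_hour must be positive")
    return throughput_per_hour / cost_per_hour


def relative_improvement(candidate: float, baseline: float) -> float:
    """Fractional gain of candidate over baseline (0.40 means 40% better)."""
    return candidate / baseline - 1.0


# Hypothetical case: both instances process the workload at the same rate,
# but the candidate instance costs less per hour.
baseline = price_performance(throughput_per_hour=1_000_000, cost_per_hour=14.0)
candidate = price_performance(throughput_per_hour=1_000_000, cost_per_hour=10.0)

print(f"{relative_improvement(candidate, baseline):.0%}")  # prints "40%"
```

At equal throughput, 40% more work per dollar means paying about 1/1.4 ≈ 0.71 of the baseline cost for the same job, i.e. roughly 29% less, not 40% less.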

Getting started with Habana Gaudi on cnvrg.io requires first setting up an Amazon EKS cluster using DL1 EC2 instances and then enabling them in cnvrg. Setup and installation instructions are available in the cnvrg documentation. Below, we show how to start training models on Gaudi once setup and installation are complete.

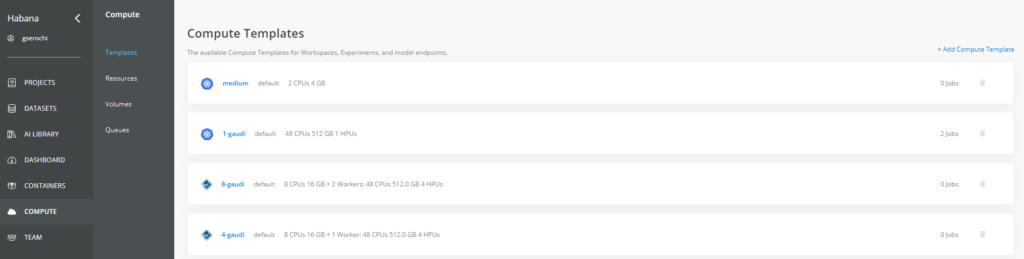

In cnvrg, Kubernetes clusters and on-premises machines are referred to as compute resources, and cnvrg integrates on-premises and cloud compute resources seamlessly. Figure 1 shows examples of compute templates for Gaudi HPUs (Habana Processing Units).
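One way to picture a compute template is as a slice of a node’s CPU, memory, and accelerator capacity. The sketch below is a hypothetical model, not cnvrg’s template schema: it assumes a dl1.24xlarge node exposes 96 vCPUs, 768 GiB of memory, and 8 Gaudi HPUs, and computes how many copies of each illustrative template fit on one node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """CPU cores, memory (GiB), and Gaudi HPUs offered by a node or requested by a template."""
    cpu: int
    memory_gib: int
    hpu: int


# Assumed capacity of one Amazon EC2 dl1.24xlarge node.
DL1_24XLARGE = Resources(cpu=96, memory_gib=768, hpu=8)


def max_replicas(node: Resources, template: Resources) -> int:
    """Number of template copies that fit on one node, bounded by the scarcest resource."""
    pairs = (
        (node.cpu, template.cpu),
        (node.memory_gib, template.memory_gib),
        (node.hpu, template.hpu),
    )
    # Only resources the template actually requests constrain the count.
    limits = [have // need for have, need in pairs if need > 0]
    if not limits:
        raise ValueError("template requests no resources")
    return min(limits)


# Hypothetical templates; names and sizes are illustrative, not cnvrg defaults.
templates = {
    "hpu-1": Resources(cpu=10, memory_gib=90, hpu=1),
    "hpu-4": Resources(cpu=44, memory_gib=360, hpu=4),
    "hpu-8": Resources(cpu=90, memory_gib=720, hpu=8),
}

for name, tmpl in templates.items():
    print(name, max_replicas(DL1_24XLARGE, tmpl))
```

In this sketch the 1-HPU template is limited by memory (768 // 90 = 8) as much as by HPUs. A real Kubernetes node also reserves some CPU and memory for system components, so usable capacity is somewhat lower than assumed here.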

The Gaudi developer journey begins with the Habana® SynapseAI® SDK. We won’t go into the details of the SDK here; to learn more, please visit our Documentation page. The SynapseAI® Software Suite is designed to facilitate high-performance DL training on Habana’s Gaudi processors. It includes Habana’s graph compiler and runtime, TPC kernel library, firmware and drivers, and developer tools such as the SynapseAI profiler and the TPC SDK for custom kernel development. SynapseAI is integrated with the TensorFlow and PyTorch frameworks.

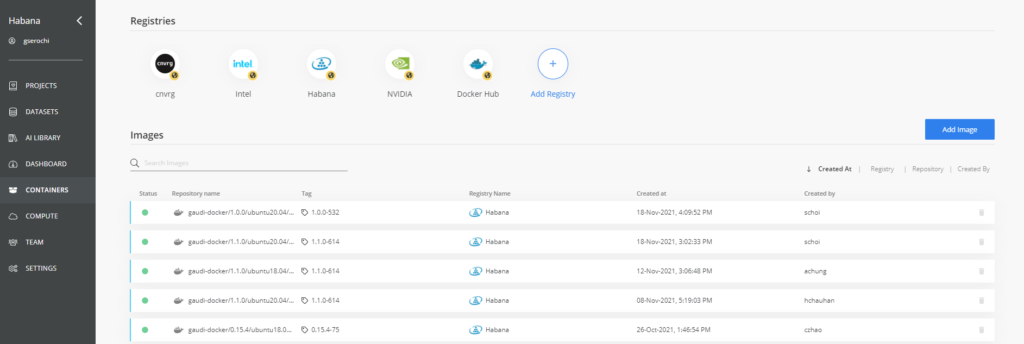

The Habana Vault hosts the SynapseAI TensorFlow and PyTorch Docker container images and is integrated into cnvrg Registries. Figure 2 shows the available registries, including cnvrg, Intel oneContainer, and Habana Vault, alongside other vendor registries. The Images section of the figure lists the available Gaudi Docker container images, with the SynapseAI release version shown in the Tag field.
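Because the Tag field carries the SynapseAI release version, picking the newest image is a version comparison rather than a string sort. The sketch below assumes tags of the form `MAJOR.MINOR.PATCH-BUILD` (for example `1.2.0-585`); that format and the sample tags are hypothetical, so check the actual tags in the Habana Vault before relying on it.

```python
import re

# Assumed (hypothetical) tag format: "<major>.<minor>.<patch>-<build>", e.g. "1.2.0-585".
TAG_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)-(\d+)$")


def parse_tag(tag: str) -> tuple:
    """Convert a tag into an integer tuple so versions compare numerically."""
    m = TAG_RE.match(tag)
    if m is None:
        raise ValueError(f"unrecognized tag: {tag!r}")
    return tuple(int(g) for g in m.groups())


def latest_tag(tags):
    """Return the highest-versioned tag, ignoring tags that don't match the format."""
    valid = [t for t in tags if TAG_RE.match(t)]
    if not valid:
        raise ValueError("no tags in the expected format")
    return max(valid, key=parse_tag)


# Hypothetical tags for illustration only.
tags = ["1.1.0-614", "1.2.0-585", "1.10.0-100", "latest"]
print(latest_tag(tags))  # prints "1.10.0-100"
```

Comparing parsed integer tuples ranks `1.10.0` above `1.2.0`, which a plain lexical sort would get wrong.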

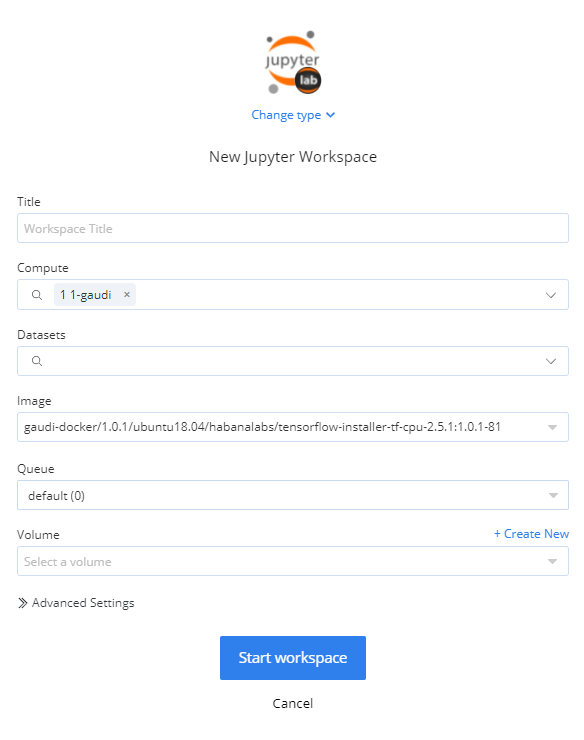

You can now bring up a new Jupyter Workspace and select the appropriate Gaudi compute and Docker image, as shown in Figure 3.

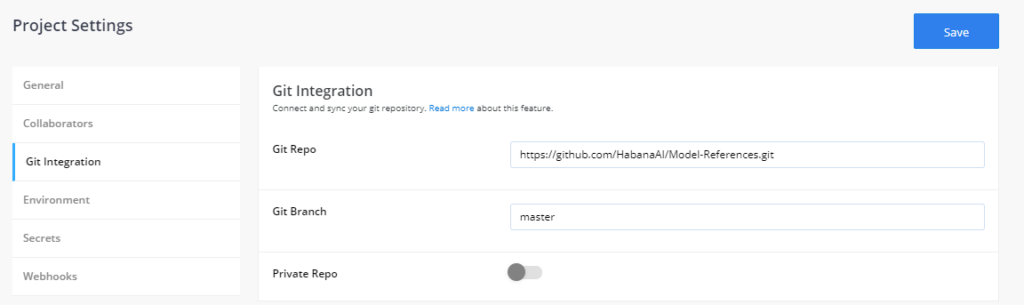

The Habana Reference Models GitHub repository contains examples of DL training models that have been ported to Gaudi. Each model comes with scripts and instructions for running it on Gaudi. Today, the repository features over twenty popular vision and NLP models, which developers can use to get started with training on Gaudi. Our roadmap expands operator and model coverage along with software features; the Gaudi reference model roadmap is also available on Habana’s GitHub.

Developers who want to get started with the Habana reference models simply add the repository location on the Git Integration page of the cnvrg Project Settings, as shown in Figure 4.

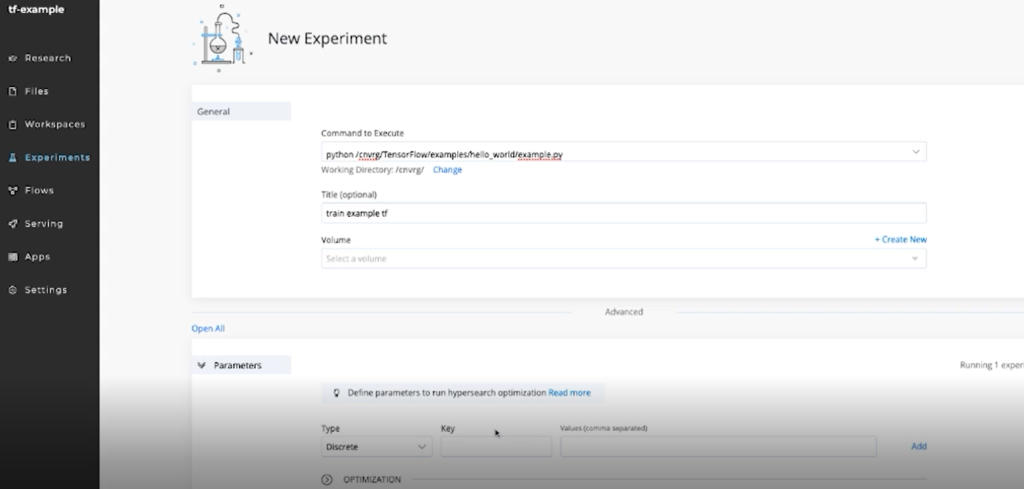

Now you can start a new Experiment in cnvrg and provide the command line to execute, as shown in Figure 5. Here we use the TensorFlow hello_world example from the Habana Reference Models repository.

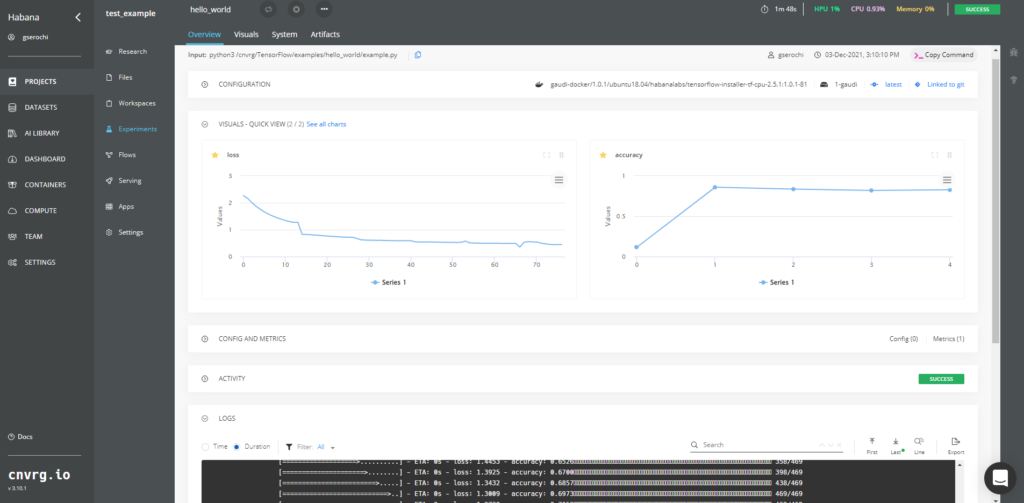

As shown in Figure 6, after execution starts, the output is displayed in the Logs section. While model training progresses, you can view the metrics (for example, loss and accuracy curves) in the Visuals section.

The Habana Developer Site is the main portal for Gaudi developers. You can find resources to get started with Gaudi, including quick-start guides, tutorials, and videos. It also contains information on the SynapseAI software, optimized models, documentation, and hosts the Forum for the Habana developer community.

In summary, cnvrg.io Metacloud enables AI developers to easily deploy Habana Gaudi for AI training. Developers can now choose Gaudi-accelerated Amazon EC2 DL1 instances (dl1.24xlarge), which deliver up to 40% better price-performance than GPU-based instances, and take advantage of Gaudi’s cost efficiency for their deep learning training needs. We are excited to offer developers greater flexibility, lower costs, and higher productivity for AI training.

This is just the beginning of our journey. We look forward to engaging with the DL community using cnvrg.io with Gaudi, both in the cloud (via Amazon EC2 DL1 instances) and on premises. We know there is much more to be done, and we are counting on you to share your feedback and requests via the Habana Forum.